
containercluster: can't disable workloadIdentityConfig once enabled

#437

Comments

|

Hi @jonnylangefeld, looking now. Could you please elaborate on this issue's impact on you? For example, is this issue a blocker, a friction point, or a nice-to-have? |

|

Thanks for your reply @jcanseco. While the issue of disabling workload identity was merely a friction point, we have now seen this issue on other fields as well, and those are actually blocking us from rolling out a controller in production right now. However, we cannot disable it via Config Connector. The same thing as described above happens: when the field is removed from the custom resource, the Config Connector controller just adds it back in right away. I can see that it gets removed initially after my patch by watching the resource with the watch command.

    kubectl scale --replicas 0 -n cnrm-system statefulset/cnrm-controller-manager

And then applied my patch via

    kubectl patch containernodepool/generic-2 -p '{"spec":{"autoscaling":null}}' --type=merge

Now checking the autoscaling setting:

    kubectl get containernodepool generic-2 -o jsonpath={.spec.autoscaling}

actually shows that it's removed. (Same thing works via a just brings the original autoscaling setting right back in from the actual GCP resource. But in this case I obviously want the Kubernetes resource to be the source of truth because that's the one that was just edited. Here the original:

    apiVersion: container.cnrm.cloud.google.com/v1beta1
    kind: ContainerNodePool
    metadata:
      annotations:
        cnrm.cloud.google.com/management-conflict-prevention-policy: none
        cnrm.cloud.google.com/project-id: my-project
      name: generic-2
      namespace: nodepools
    spec:
      autoscaling:
        maxNodeCount: 1000
        minNodeCount: 0
      clusterRef:
        external: paas-jonny1-dev-us-west1
      initialNodeCount: 1
      location: us-west1
      management:
        autoRepair: true
        autoUpgrade: true
      maxPodsPerNode: 64
      nodeConfig:
        diskSizeGb: 500
        diskType: pd-ssd
        imageType: COS
        labels:
          instance-family: e2
          node-pool-deployment: generic-1
          node-pool-type: generic
        machineType: e2-standard-32
        metadata:
          disable-legacy-endpoints: "true"
        minCpuPlatform: AUTOMATIC
        oauthScopes:
        - https://www.googleapis.com/auth/monitoring
        - https://www.googleapis.com/auth/devstorage.read_only
        - https://www.googleapis.com/auth/logging.write
        serviceAccountRef:
          external: [email protected]
        shieldedInstanceConfig:
          enableIntegrityMonitoring: true
          enableSecureBoot: true
        tags:
        - allow-health-checkers
        - paas-gke-node
        - paas-jonny1-dev-us-west1
        workloadMetadataConfig:
          nodeMetadata: GKE_METADATA_SERVER
      nodeCount: 0
      nodeLocations:
      - us-west1-a
      - us-west1-b
      upgradeSettings:
        maxSurge: 20
        maxUnavailable: 0
      version: 1.17.17-gke.1101

This was tested on KCC version and |
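For readers following the patch commands in this comment: `--type=merge` sends a JSON merge patch (RFC 7386), in which a `null` value deletes the key. The following is a minimal Python sketch of those semantics, not kubectl's or the API server's actual code. The helper name `json_merge_patch` and the trimmed-down `original` object are illustrative assumptions.

```python
import copy


def json_merge_patch(target, patch):
    """Return the result of applying an RFC 7386 JSON merge patch to target."""
    if not isinstance(patch, dict):
        # A non-object patch replaces the target wholesale.
        return copy.deepcopy(patch)
    result = copy.deepcopy(target) if isinstance(target, dict) else {}
    for key, value in patch.items():
        if value is None:
            # null in a merge patch means "delete this key".
            result.pop(key, None)
        else:
            result[key] = json_merge_patch(result.get(key), value)
    return result


# Hypothetical, trimmed-down stand-in for the ContainerNodePool object.
original = {
    "spec": {
        "autoscaling": {"maxNodeCount": 1000, "minNodeCount": 0},
        "location": "us-west1",
    }
}

# Same shape as the patch used in this comment: the key is removed.
patched = json_merge_patch(original, {"spec": {"autoscaling": None}})

# An empty object merges nothing into the existing value, so it is a no-op.
unchanged = json_merge_patch(original, {"spec": {"autoscaling": {}}})

print(patched)                # {'spec': {'location': 'us-west1'}}
print(unchanged == original)  # True
```

This matches the observation that the field really does disappear from the Kubernetes object right after the patch. It also suggests that, if `{}` is sent as a merge patch, nothing changes on the object at all, so the later re-appearance of the field would have to come from the controller rather than from the patch itself.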

|

Hi @jonnylangefeld, we're very sorry you ran into these scenarios on those Container resources. Ideally, you should be able to disable Workload Identity and Autoscaling in the ways you defined, so we're working on improving that behavior for Config Connector. In the meantime, our recommended workaround is to remove the fields from your config, manually disable those fields on GCP, and then trigger a reconcile. However, we're aware that that workaround is infeasible given the number of clusters/nodePools you might have. We found one workaround for the

Like we mentioned in our sync, we're working on improving our field deletion/disabling user experience, so if you run into other scenarios, we value any additional data points you can provide to help us improve! |
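The behavior discussed in this thread, and the reason the manual workaround sticks, can be pictured with a toy model. This is only a sketch of the hypothesis raised in the thread (fields missing from the spec get filled in from the live GCP resource). It is not Config Connector's actual reconcile logic, and `toy_reconcile` and the sample dictionaries are hypothetical.

```python
import copy


def toy_reconcile(spec, live):
    """Hypothetical model: any field absent from the spec is adopted from live state."""
    adopted = copy.deepcopy(spec)
    for field, value in live.items():
        # setdefault only fills fields the user did not set.
        adopted.setdefault(field, copy.deepcopy(value))
    return adopted


# Live GCP state still has autoscaling enabled.
live = {
    "autoscaling": {"maxNodeCount": 1000, "minNodeCount": 0},
    "location": "us-west1",
}

# The user removed autoscaling from the Kubernetes resource only.
spec = {"location": "us-west1"}
after_patch_only = toy_reconcile(spec, live)

# Workaround: also disable the feature on GCP before the next reconcile.
live_disabled = {k: v for k, v in live.items() if k != "autoscaling"}
after_workaround = toy_reconcile(spec, live_disabled)

print("autoscaling" in after_patch_only)   # True: the field comes back
print("autoscaling" in after_workaround)   # False: the removal sticks
```

Under this model, removing a field from the spec is indistinguishable from never having set it, which is why the removal has to be mirrored on the GCP side until the controller treats an explicit removal as a request to disable the feature.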

|

Thank you @caieo for the update! In that case we wouldn't go with the workaround for |

|

@jonnylangefeld, unfortunately, our fix will be specific to the |

|

I see. So next week's release will specifically target |

Checklist

Bug Description

I created a simple

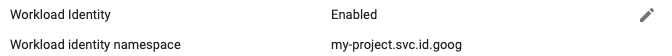

containerclusterresource with workload identity enabled.╰─ kubectl get containercluster paas-jonny1-dev-us-west1 -o jsonpath={.spec.workloadIdentityConfig} {"identityNamespace":"my-project.svc.id.goog"}This all works and on GCP, I see that it's enabled for my cluster:

But now it doesn't work to disable it on an existing cluster.

I'm trying to disable by running

╰─ kubectl patch containercluster/paas-jonny1-dev-us-west1 -p '{"spec":{"workloadIdentityConfig":null}}' --type=merge containercluster.container.cnrm.cloud.google.com/paas-jonny1-dev-us-west1 patchedBy watching the

containerclusterin a separate window viawatch kubectl get containercluster paas-jonny1-dev-us-west1 -o jsonpath={.spec.workloadIdentityConfig}I see that my patch command actually removes the

.spec.workloadIdentityConfigas expected, but right after thecnrm-controller-manageradds it back in (probably because it takes what's on GCP as source of truth).If I explicitly set

spec.workloadIdentityConfigtonull, I would expect that the feature gets disabled on GCP. I have also tried setting it to{}instead ofnull, but that doesn't work either.Additional Diagnostic Information

Kubernetes Cluster Version

Config Connector Version

Config Connector Mode

Log Output

Steps to Reproduce

Steps to reproduce the issue
