At Google, we believe in the power of collaboration and open research to drive innovation, and we're grateful to see Gemma embraced by the community with millions of downloads within a few short months of its launch.

This enthusiastic response has been incredibly inspiring, as developers have created a diverse range of projects. From Navarasa, a multilingual variant for Indic languages, to Octopus v2, an on-device action model, developers are showcasing the potential of Gemma to create impactful and accessible AI solutions.

This spirit of exploration and creativity has also fueled our development of CodeGemma, with its powerful code completion and generation capabilities, and RecurrentGemma, offering efficient inference and research possibilities.

Gemma is a family of lightweight, state-of-the-art open models built from the same research and technology used to create the Gemini models. Today, we're excited to further expand the Gemma family with the introduction of PaliGemma, a powerful open vision-language model (VLM), and a sneak peek into the near future with the announcement of Gemma 2. Additionally, we're furthering our commitment to responsible AI with updates to our Responsible Generative AI Toolkit, providing developers with new and enhanced tools for evaluating model safety and filtering harmful content.

PaliGemma is a powerful open VLM inspired by PaLI-3. Built on open components including the SigLIP vision model and the Gemma language model, PaliGemma is designed for class-leading fine-tune performance on a wide range of vision-language tasks. This includes image and short video captioning, visual question answering, understanding text in images, object detection, and object segmentation.

We're providing both pretrained and fine-tuned checkpoints at multiple resolutions, as well as checkpoints specifically tuned to a mixture of tasks for immediate exploration.

To facilitate open exploration and research, PaliGemma is available through various platforms and resources. Start exploring today with free options like Kaggle and Colab notebooks. Academic researchers seeking to push the boundaries of vision-language research can also apply for Google Cloud credits to support their work.

Get started with PaliGemma today. You can find PaliGemma on GitHub, Hugging Face models, Kaggle, Vertex AI Model Garden, and ai.nvidia.com (accelerated with TensorRT-LLM), with easy integration through JAX and Hugging Face Transformers. Keras integration is coming soon. You can also interact with the model via this Hugging Face Space.
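As a rough illustration of the Hugging Face Transformers route, the sketch below loads a checkpoint and generates text for one image. It is a minimal example under stated assumptions rather than an official recipe. The checkpoint name `google/paligemma-3b-mix-224`, the task-prefixed prompt style (such as `caption en`), and the helper names `build_prompt` and `run_paligemma` are not taken from this post. Check them against the model card before relying on them. Downloading the weights may also require accepting the model license on Hugging Face.

```python
def build_prompt(task: str, text: str = "", lang: str = "en") -> str:
    """Compose a task-prefixed prompt such as 'caption en'.

    Hypothetical helper: the '<task> <lang> <text>' convention is an
    assumption based on how the mixed-task checkpoints are usually prompted.
    """
    parts = [task.strip(), lang.strip(), text.strip()]
    return " ".join(p for p in parts if p)


def run_paligemma(
    image_path: str,
    prompt: str,
    model_id: str = "google/paligemma-3b-mix-224",  # assumed checkpoint name
    max_new_tokens: int = 32,
) -> str:
    """Run one image + prompt through a PaliGemma checkpoint and return the text."""
    # Heavy dependencies are imported lazily so that the prompt helper above
    # can be used without torch or transformers installed.
    import torch
    from PIL import Image
    from transformers import AutoProcessor, PaliGemmaForConditionalGeneration

    processor = AutoProcessor.from_pretrained(model_id)
    model = PaliGemmaForConditionalGeneration.from_pretrained(model_id).eval()

    image = Image.open(image_path).convert("RGB")
    inputs = processor(text=prompt, images=image, return_tensors="pt")
    prompt_len = inputs["input_ids"].shape[-1]

    with torch.inference_mode():
        output = model.generate(**inputs, max_new_tokens=max_new_tokens, do_sample=False)

    # Keep only the newly generated tokens, not the echoed prompt.
    return processor.decode(output[0][prompt_len:], skip_special_tokens=True)


if __name__ == "__main__":
    # "photo.jpg" is a placeholder path; replace it with a local image.
    print(run_paligemma("photo.jpg", build_prompt("caption")))
```

For visual question answering, the same function could be called with a prompt like `build_prompt("answer", "What is in the image?")`, again assuming the mixed-task checkpoint follows that prompt convention.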

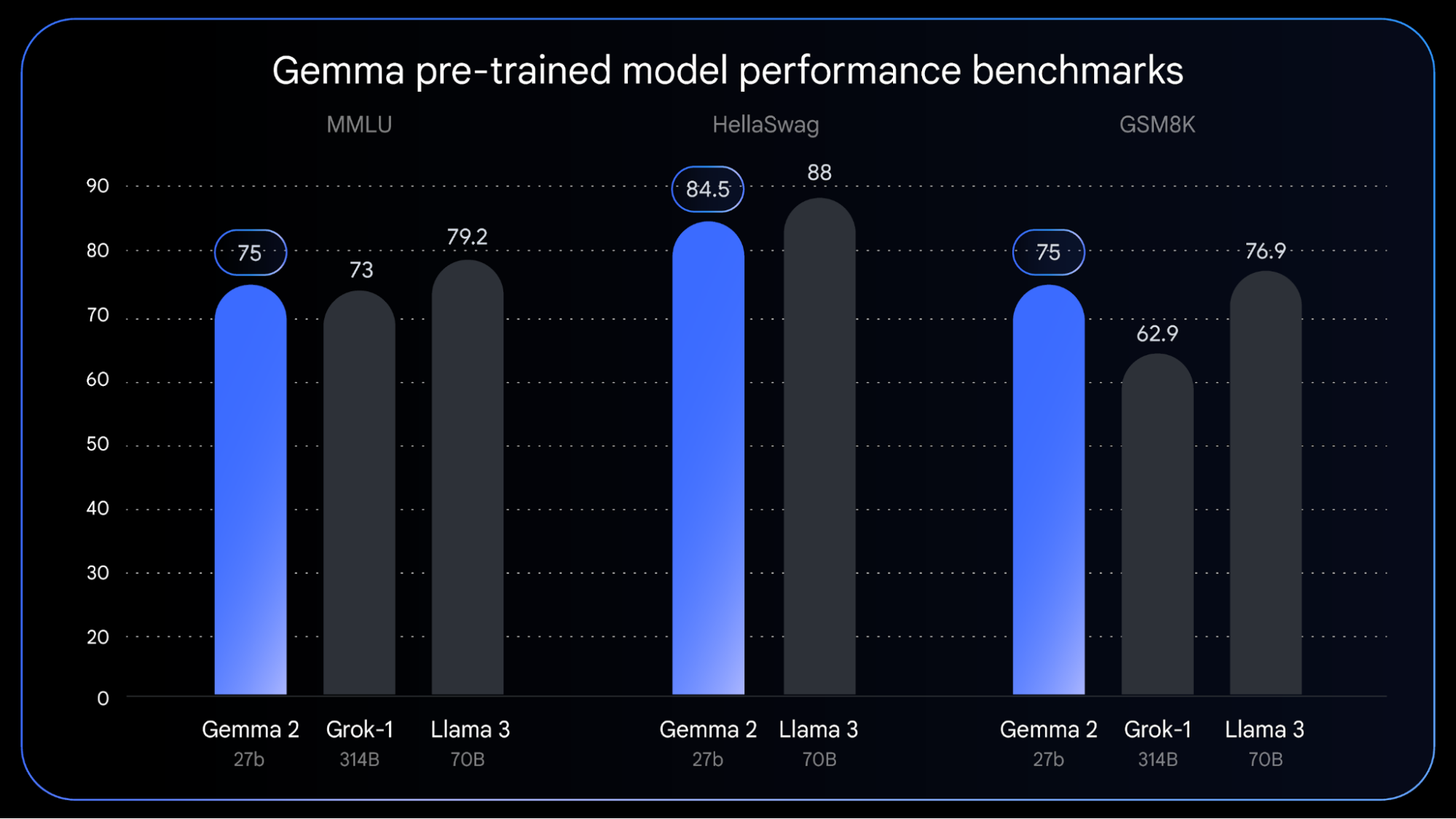

We're thrilled to announce the upcoming arrival of Gemma 2, the next generation of Gemma models. Gemma 2 will be available in new sizes for a broad range of AI developer use cases and features a brand new architecture designed for breakthrough performance and efficiency, offering benefits such as:

Stay tuned for the official launch of Gemma 2 in the coming weeks!

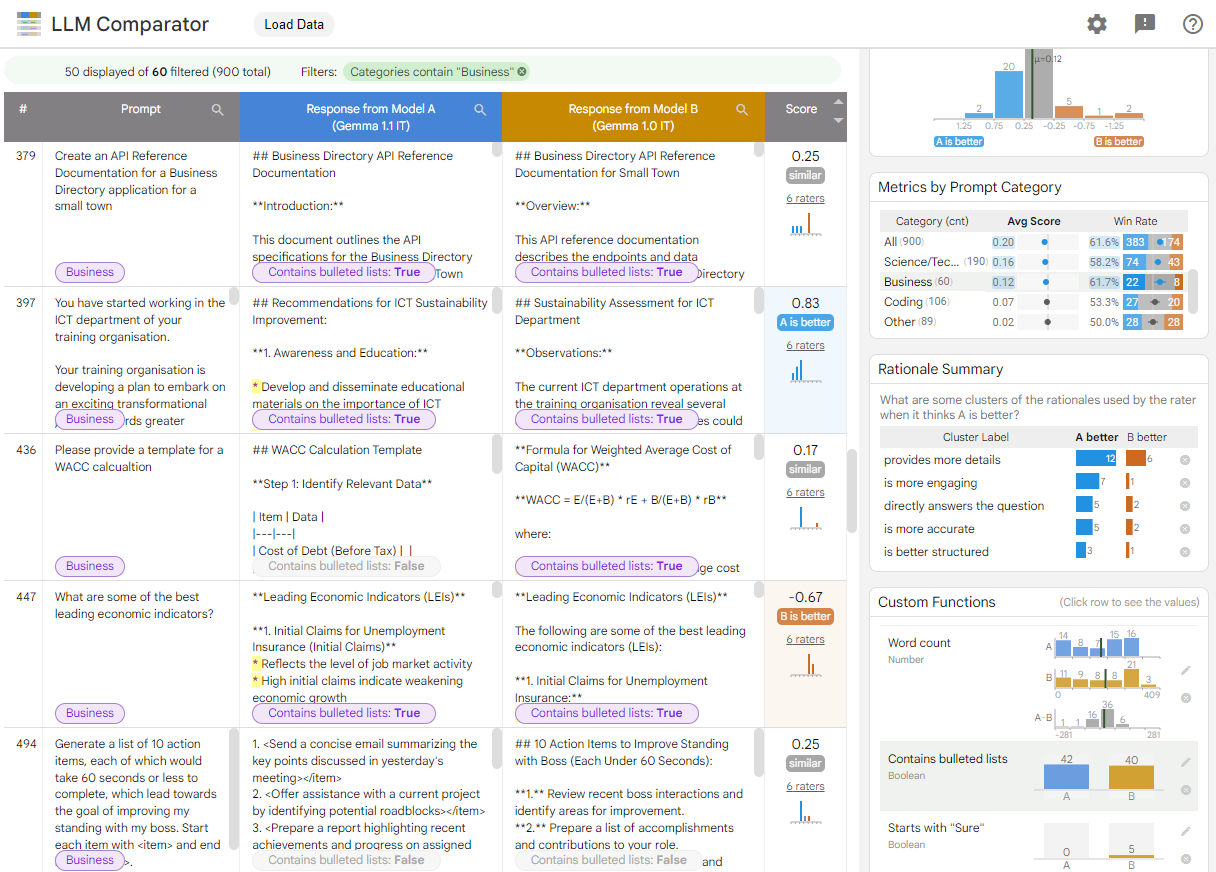

For this reason, we're expanding our Responsible Generative AI Toolkit to help developers conduct more robust model evaluations by releasing the LLM Comparator as open source. The LLM Comparator is a new interactive, visual tool for running side-by-side evaluations that assess the quality and safety of model responses. To see the LLM Comparator in action, explore our demo showcasing a comparison between Gemma 1.1 and Gemma 1.0.

We hope that this tool will further advance the toolkit’s mission to help developers create AI applications that are not only innovative but also safe and responsible.

As we continue to expand the Gemma family of open models, we remain dedicated to fostering a collaborative environment where cutting-edge AI technology and responsible development go hand in hand. We're excited to see what you build with these new tools and how, together, we can shape the future of AI.