Use service accounts

Some data sources support data transfer authentication by using a service account through the Google Cloud console, API, or the bq command-line tool. A service account is a Google Account associated with your Google Cloud project. A service account can run jobs, such as scheduled queries or batch processing pipelines, by authenticating with the service account credentials rather than a user's credentials.

You can update an existing data transfer with the credentials of a service account. For more information, see Update data transfer credentials.

The following situations require updating credentials:

- Your transfer failed to authorize the user's access to the data source:

  Error code 401 : Request is missing required authentication credential. UNAUTHENTICATED

- You receive an INVALID_USER error when you attempt to run the transfer:

  Error code 5 : Authentication failure: User Id not found. Error code: INVALID_USERID
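As a rough illustration of the two situations above, the following sketch checks an error message for the markers that appear in those messages. The function name `needs_credential_update` and the substring matching are assumptions made for illustration; the error text is not documented here as a stable contract, so treat this as a heuristic.

```python
# Markers copied from the two error messages shown above.
CREDENTIAL_ERROR_MARKERS = ("UNAUTHENTICATED", "INVALID_USERID")


def needs_credential_update(error_message: str) -> bool:
    """Return True if the message contains one of the credential-error markers.

    Hypothetical helper: it only does a substring check on the message text.
    """
    return any(marker in error_message for marker in CREDENTIAL_ERROR_MARKERS)


if __name__ == "__main__":
    message = (
        "Error code 5 : Authentication failure: User Id not found. "
        "Error code: INVALID_USERID"
    )
    print(needs_credential_update(message))
```

A check like this could be used to decide when to alert an operator that a transfer's credentials need to be replaced with those of a service account.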

To learn more about authenticating with service accounts, see Introduction to authentication.

Data sources with service account support

BigQuery Data Transfer Service can use service account credentials for transfers with the following:

- Cloud Storage

- Amazon Redshift

- Amazon S3

- Campaign Manager

- Dataset Copy

- Google Ad Manager

- Google Ads

- Google Merchant Center

- Google Play

- Scheduled Queries

- Search Ads 360

- Teradata

- YouTube Content Owner

Before you begin

- Verify that you have completed all actions required in Enabling BigQuery Data Transfer Service.

- Grant Identity and Access Management (IAM) roles that give users the necessary permissions to perform each task in this document.

Required permissions

To update a data transfer to use a service account, you must have the following permissions:

- The bigquery.transfers.update permission to modify the transfer. The predefined roles/bigquery.admin IAM role includes this permission.

- Access to the service account. For more information about granting users the service account role, see Service Account User role.

Ensure that the service account you choose to run the transfer has the following permissions:

- The bigquery.datasets.get and bigquery.datasets.update permissions on the target dataset. If the table uses column-level access control, the service account must also have the bigquery.tables.setCategory permission. The bigquery.admin predefined IAM role includes all of these permissions. For more information about IAM roles in BigQuery Data Transfer Service, see Introduction to IAM.

- Access to the configured transfer data source. For more information about the required permissions for different data sources, see Data sources with service account support.

For Google Ads transfers, the service account must be granted domain-wide authority. For more information, see Google Ads API Service Account guide.
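The dataset permissions listed above for the service account can be expressed as a simple set comparison. The sketch below is illustrative only: `missing_permissions` is a hypothetical helper, and it assumes you have already obtained the set of permissions granted to the service account by some other means. It does not call IAM, and it does not cover access to the data source itself.

```python
# Permissions named in this section for the service account that runs the transfer.
BASE_PERMISSIONS = {"bigquery.datasets.get", "bigquery.datasets.update"}
COLUMN_ACL_PERMISSION = "bigquery.tables.setCategory"


def missing_permissions(granted, uses_column_level_access_control=False):
    """Return the required permissions that are absent from `granted`, sorted."""
    required = set(BASE_PERMISSIONS)
    if uses_column_level_access_control:
        # Needed only when the target table uses column-level access control.
        required.add(COLUMN_ACL_PERMISSION)
    return sorted(required - set(granted))


if __name__ == "__main__":
    granted = {"bigquery.datasets.get"}
    print(missing_permissions(granted, uses_column_level_access_control=True))
```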

Update data transfer credentials

Console

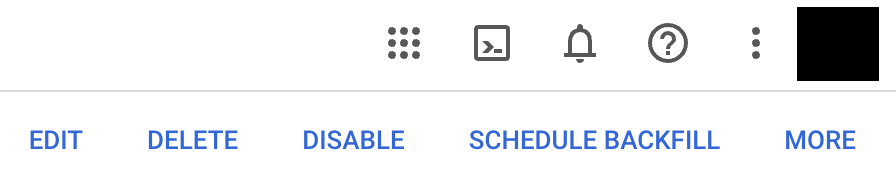

The following procedure updates a data transfer configuration to authenticate as a service account instead of your individual user account.

1. In the Google Cloud console, go to the Data transfers page.

2. Click the transfer in the data transfers list.

3. Click EDIT to update the transfer configuration.

4. In the Service Account field, enter the service account name.

5. Click Save.

bq

To update the credentials of a data transfer, you can use the bq command-line tool to update the transfer configuration.

Use the bq update command with the --transfer_config, --update_credentials, and --service_account_name flags.

For example, the following command updates a data transfer configuration to authenticate as a service account instead of your individual user account:

bq update \
--transfer_config \
--update_credentials \
--service_account_name=abcdef-test-sa@abcdef-test.iam.gserviceaccount.com \
projects/862514376110/locations/us/transferConfigs/5dd12f26-0000-262f-bc38-089e0820fe38
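If you script this update, it can help to assemble the command as an argument list rather than a shell string. The following is a minimal sketch that uses only the flags shown above; `build_bq_update_args` is a hypothetical helper name, and running the result assumes the bq tool is installed and authenticated.

```python
import shlex


def build_bq_update_args(transfer_config_name, service_account_name):
    """Build the argument list for the bq update command shown above."""
    return [
        "bq",
        "update",
        "--transfer_config",
        "--update_credentials",
        f"--service_account_name={service_account_name}",
        transfer_config_name,  # full resource name of the transfer configuration
    ]


if __name__ == "__main__":
    args = build_bq_update_args(
        "projects/862514376110/locations/us/transferConfigs/"
        "5dd12f26-0000-262f-bc38-089e0820fe38",
        "abcdef-test-sa@abcdef-test.iam.gserviceaccount.com",
    )
    # Print the command for review; pass `args` to subprocess.run(args, check=True)
    # to execute it on a machine where bq is available.
    print(shlex.join(args))
```

Passing a list to `subprocess.run` avoids shell quoting problems with the resource name and the service account email.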

Java

Before trying this sample, follow the Java setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Java API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

Python

Before trying this sample, follow the Python setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Python API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.
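A minimal sketch of the update with the google-cloud-bigquery-datatransfer client library might look like the following. It assumes that package is installed and that Application Default Credentials are configured. The helper `is_transfer_config_name` and the wrapper function name are hypothetical, added only to validate input before calling the API; the name pattern is inferred from the bq example in this document.

```python
import re

# Resource-name shape taken from the bq example in this document.
# The locations segment is treated as optional (an assumption).
_CONFIG_NAME_PATTERN = re.compile(
    r"^projects/[^/]+/(locations/[^/]+/)?transferConfigs/[^/]+$"
)


def is_transfer_config_name(name: str) -> bool:
    """Hypothetical helper: check that `name` looks like a transfer config resource name."""
    return _CONFIG_NAME_PATTERN.match(name) is not None


def update_transfer_service_account(transfer_config_name, service_account_name):
    """Switch an existing transfer configuration to run as a service account."""
    if not is_transfer_config_name(transfer_config_name):
        raise ValueError(f"Unexpected transfer config name: {transfer_config_name!r}")

    # Imported here so this module loads even without the client library installed.
    from google.cloud import bigquery_datatransfer
    from google.protobuf import field_mask_pb2

    client = bigquery_datatransfer.DataTransferServiceClient()
    transfer_config = bigquery_datatransfer.TransferConfig(name=transfer_config_name)

    # Only service_account_name is listed in the update mask.
    return client.update_transfer_config(
        request={
            "transfer_config": transfer_config,
            "update_mask": field_mask_pb2.FieldMask(paths=["service_account_name"]),
            "service_account_name": service_account_name,
        }
    )
```

Calling `update_transfer_service_account` with the transfer configuration name and the service account email from the bq example would perform the same update as that command.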