Choosing the right BI tool for the right use case at Better.com

Kenny Ning

Data Engineer, Better.com

I used to work at a very large company whose dashboarding tools evolved from custom tool #1 to custom tool #2 and finally to Tableau. I immediately fell in love with how beautiful the visualizations looked in Tableau.

When I first started at Better.com, I saw that they were using an open-source tool for dashboarding. Naturally, I suggested we bring on Tableau. To me, the pros were obvious:

Encourage exploratory analysis

Data was mostly being used for reporting at the time. I felt that Tableau would help us evolve from a pure reporting data culture to an exploratory, insights-driven one.

I had already had experience with Tableau

I was very comfortable as a Tableau user and felt confident I could teach the rest of the analytics community (~12 people at the time).

Add a level of trust to core metrics

The open-source tool had quite limited visualization options, which made our core metrics dashboards look very sketchy. Good data visualization improves trust in the data, and Tableau has great visualizations.

During this time we also considered a seemingly similar tool called Looker. However, we didn't take the time to do a full evaluation of the platform, and here's why we didn't think we needed to:

- We didn’t think there was much of a difference between Tableau and Looker

- We knew Tableau was the gold standard for visualization, so why would we look anywhere else?

- Why should I have to learn another query language?

- We thought Tableau’s pricing model would fit our use case better

- We already had one Tableau “expert” versus no Looker experts

Two months after my start date, I got what I wanted: a signed Tableau contract. Smooth sailing onwards, right?

Why Tableau didn’t work at Better.com

We decided to administer Tableau ourselves

As a financial services company, we take data security very seriously. For this reason, we decided to host Tableau Server on our own stack instead of using Tableau’s hosted offering. I had never administered a dashboarding platform before, and Tableau did not make it easy.

For instance, every time we ran out of licenses, we’d have to contact our account rep, renegotiate the number of additional licenses, and then manually deploy them on our server.

Tableau technical support was also very spotty: time to first response sometimes stretched to weeks. On top of that, because we were running Tableau on-premise, we had to package a massive log file and send it to them for review with every issue, further extending time to resolution.

Data extracts were difficult to work with

Based on past experience, I knew that live connections in Tableau made for a poor experience, with really jagged dashboard UIs. Getting the best performance out of Tableau requires data extracts, where you essentially compress your source data into a local “data extract” that Tableau dashboards can consume much more efficiently.

However, scheduling regularly refreshing extracts presented several challenges. To address one of them, we had to create and manage yet another ETL process (i.e., extracting data from our Redshift data warehouse, transforming it into an extract, and loading it onto our server). Tableau’s API for managing this was cumbersome to use and poorly documented.

People wanted to know more details behind a dashboard

Like many financial services companies, our business stakeholders are fairly comfortable with data and are not satisfied with just viewing dashboards. They want to understand the assumptions, see the underlying code (if relevant), and extend it in a different direction.

A strong, curious data culture is incompatible with Tableau for two reasons:

- Tableau doesn’t make the SQL of the underlying data source transparent. Business users were accustomed to seeing the SQL behind a dashboard in our old tool. This was typically how they would build trust in what they were looking at.

- Because these users didn’t have trust in the dashboards, they’d build their own using their own basic (but hacky) SQL skills, which in the end would just create more confusion.

The analytics community was exploding in size

Someone once told me Tableau is a great “single-player game”. If you’re the only one making dashboards, it’s a dream. Heck, I’d say it’s still pretty sweet if you have up to ten dashboard creators who are in lockstep with each other.

However, the experience of doing analysis vastly changes when you go from one team producing analysis to ten teams and hundreds of people producing analysis.

Some requirements of a large analytics community that we weren't able to meet with Tableau included:

- Collaborating on a single dashboard

There’s no concept of parallel publishing. You have to take turns downloading the workbook from the server and republishing it.

- Version control of logic

What happens if a metric gets calculated incorrectly because you put in some bad SQL logic? How do you find the change responsible, and how do you revert it?

- Reusability of components

Say one of the data tables referenced in 50 data extracts changes its name. Now you have to manually update each extract, or else every downstream dashboard will fail to load.
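To make the reusability problem concrete, here is a toy Python sketch. It is not Tableau or Looker code, and the table name `analytics.loans`, the `loan_amount` column, and the `dimension_i` groupings are hypothetical stand-ins. When every downstream query is generated from one shared definition, a table rename becomes a one-line change instead of 50 manual edits.

```python
from typing import List

# Toy illustration only: plain Python, not Tableau extracts or LookML.
# All table and column names below are hypothetical.
LOANS_TABLE = "analytics.loans"  # the single shared definition of the source table
GROUPINGS = [f"dimension_{i}" for i in range(50)]  # stand-ins for 50 downstream dashboards


def funded_volume_sql(group_by: str, table: str) -> str:
    """Render one dashboard's query against the given source table."""
    return (
        f"SELECT {group_by}, SUM(loan_amount) AS funded_volume\n"
        f"FROM {table}\n"
        f"GROUP BY {group_by}"
    )


def build_all(table: str) -> List[str]:
    """Regenerate every downstream query from the single shared definition."""
    return [funded_volume_sql(group_by, table) for group_by in GROUPINGS]


if __name__ == "__main__":
    queries = build_all(LOANS_TABLE)
    # A rename means changing one value, not editing 50 queries by hand.
    renamed = build_all("analytics.loans_v2")
    print(len(queries), "queries regenerated")
    print(renamed[0])
```

With hand-copied queries, the same rename means finding and editing every copy of the `FROM` clause.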

Why Looker was a better fit

I had made the naive error of favoring a tool I already knew over one I didn’t. Worse yet, with a little extra user research during vendor selection, I would have seen that the biggest gap in our analytics process was the lack of a single source of truth for common, repeatable analysis.

After we kicked off a three-month trial with Looker, it quickly became obvious that Looker’s strengths were much better aligned with the company’s needs:

- Business logic can be more confidently managed by individual analyst teams

Old dashboards that relied on hundred-line SQL queries could have their components refactored into LookML. This let teams take ownership of their business logic more safely, with version control and reusable components.

- SQL not required, but there if you want it

Looker Explores removed SQL as a prerequisite for basic analysis. However, unlike with Tableau, if you want to dig into the underlying SQL, it’s just a click away in the generated SQL tab.

- Hosted experience

We were more comfortable with Looker’s hosted offering because the data never actually leaves our servers. By outsourcing hosting to Looker, we could still maintain a security-compliant environment while freeing up our developers to focus on applications rather than table-stakes features (e.g., setting up email).

Escaping the extra bloat of Tableau’s extract model and gaining Looker’s live chat support were further incentives for us to switch.

Conclusion

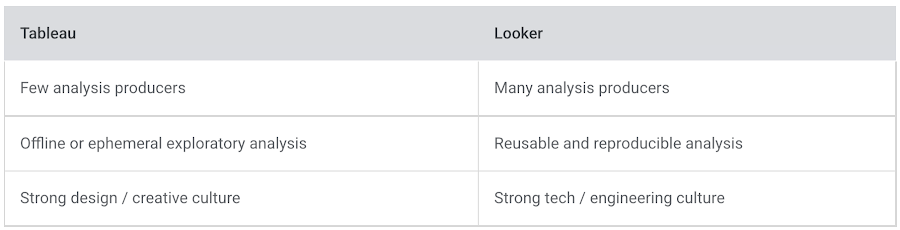

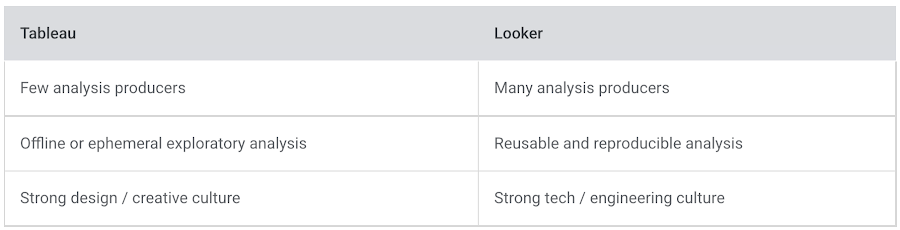

Though Tableau and Looker may seem interchangeable on the surface, they are optimized for very different use cases, so it’s important to consider carefully which use case matters most to your organization.

For our use case of enabling reproducible logic across a highly decentralized analytics community, Looker ended up being the right choice.