You do the math: expect the unexpected when AI systems are asked math questions

Out of the recent hubbub at OpenAI came a rumor: the company had created a foundation model that could now do elementary math. Although the ability to perform elementary calculations may seem underwhelming to some, creating an AI system capable of math is a step toward artificial general intelligence (AGI), and something that has been elusive to date.

Some might find this news more confusing than underwhelming. Certainly you can ask ChatGPT a math question and get an answer. What, then, is happening under the hood when you ask ChatGPT, “What is 2+2?”

In this post I want to outline two common approaches to how foundation models (the technology that undergirds AI systems including OpenAI’s ChatGPT) currently handle math questions. In doing so, I hope to provide a deeper understanding of what foundation models and broader AI systems actually do with prompts, and of what we should expect from AI given these capabilities.

In short, when you pose a math question to an AI system, it produces an answer either through probabilistic prediction or by using a special tool. In both cases, the foundation model does not use math functions; it composes coherent responses through language. The result is easy to confuse with math competency. I use the two approaches to illustrate how AI systems are dependably unexpected; that is, they produce unexpected outcomes, produce outcomes through unexpected means, or both. Taking this quality of AI systems seriously means asking where unexpectedness is tolerable and where it isn’t.

-

As a quick note, I will use the term foundation model throughout this post. There are many types of foundation models at this point, and large language models (LLMs) are among the most common. In this post, the foundation models I am talking about are those trained at least in part on written language; some foundation models are also (or exclusively) trained on images and other data. I focus on these because I am assuming that the input to and output from an AI system built on a foundation model are textual.

-

Okay, let's jump in!

Approach 1: Predict what’s next

Most commonly, foundation models handle math questions as language questions about math. A simple way to understand these language capabilities is to think of predictive keyboards.

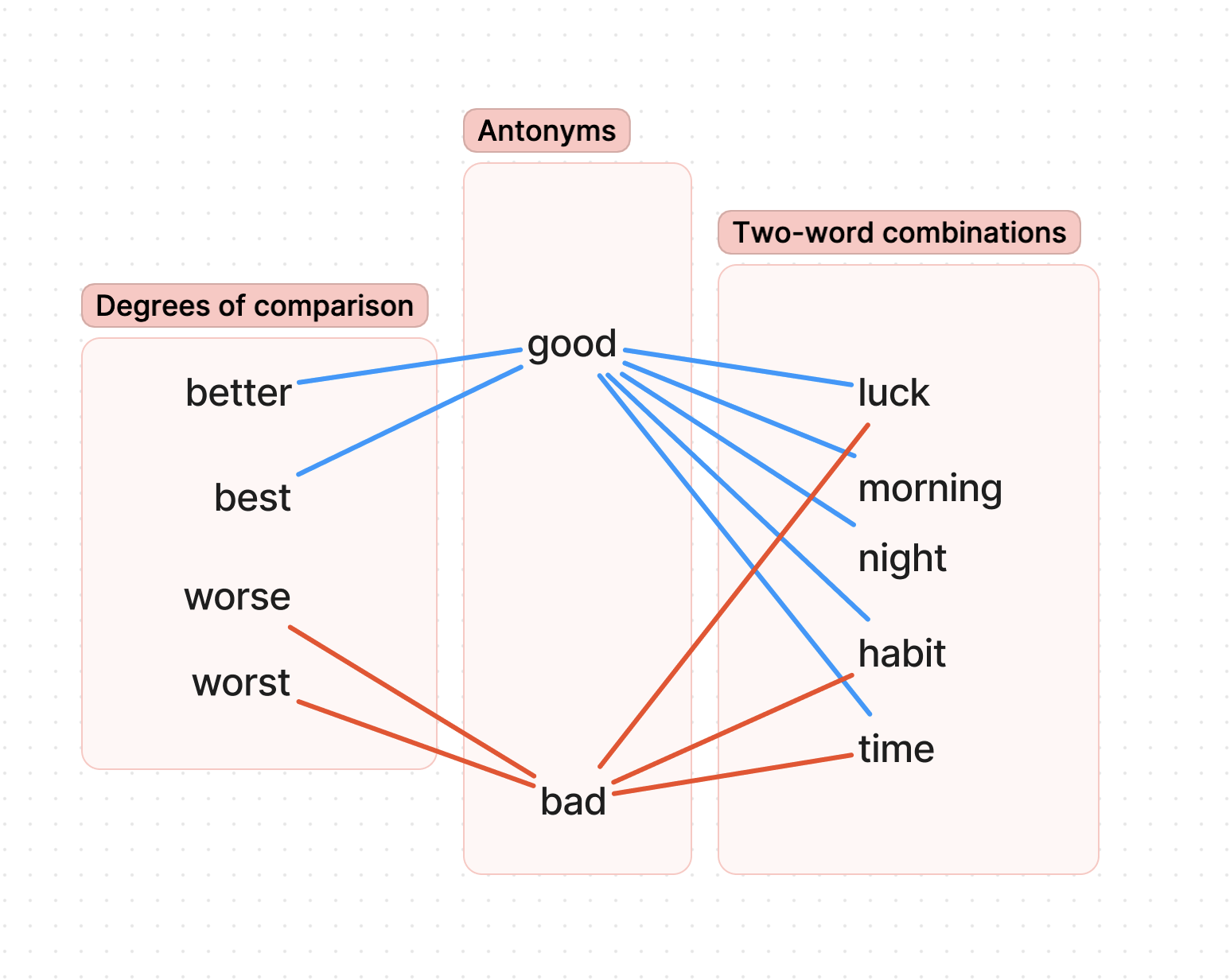

Predictive keyboards, like the ones found on smartphones, use what has just been typed to suggest what word might come next. For example, if you type “good”, a predictive keyboard might suggest “morning”, “night”, and “luck”. Perhaps these are great guesses, or perhaps you are feeling particularly Shakespearean and are actually typing “good ’morrow”. The keyboard bases its suggestions on the likelihood that certain words will be useful to the user. As such, the suggestions may or may not be useful in a given circumstance, because the keyboard has a limited view into the overall conversation and therefore a limited view of the user’s intent and context.
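To make the keyboard analogy concrete, here is a minimal Python sketch of a bigram predictor: it counts which word followed which in a tiny, made-up message history and suggests the most frequent followers. The corpus and the function names are hypothetical, and real keyboards are likely more sophisticated, but the principle of suggesting by frequency is the same.

```python
from collections import Counter, defaultdict

def build_bigram_counts(corpus):
    """Count how often each word follows each other word in a toy corpus."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts

def suggest(counts, word, k=3):
    """Return up to k most frequent next words, like a keyboard's suggestion bar."""
    return [w for w, _ in counts[word.lower()].most_common(k)]

# Hypothetical message history; a real keyboard learns from far more text.
corpus = [
    "good morning everyone",
    "good morning to you",
    "good night and sleep well",
    "good luck on the exam",
    "good morning sunshine",
    "good night",
    "good luck",
]

counts = build_bigram_counts(corpus)
print(suggest(counts, "good"))  # ['morning', 'night', 'luck']
```

Because “good morrow” never appears in this history, no amount of Shakespearean intent will make “morrow” show up among the suggestions.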

Foundation models treat math questions (and really all inputs) in a similar way. The answer “4” is very likely to come after the sentence “What is 2+2?”
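A deliberately oversimplified sketch of that idea: the toy “training data” records what text followed a prompt, and the “model” just picks the most frequent continuation. Real foundation models work on tokens and learned weights rather than exact-match lookups, and the data and helper names here are invented, but notice that no line of the code adds 2 and 2.

```python
from collections import Counter

# Hypothetical toy training text: a prompt paired with whatever came next.
training_pairs = [
    ("What is 2+2?", "4"),
    ("What is 2+2?", "4"),
    ("What is 2+2?", "four"),
    ("What is 2+2?", "4"),
    ("What is 2+2?", "5"),  # a joke or a mistake in the data still gets counted
]

def continuation_probabilities(pairs, prompt):
    """Estimate P(next text | prompt) from how often each continuation followed it."""
    followers = Counter(nxt for p, nxt in pairs if p == prompt)
    total = sum(followers.values())
    if total == 0:
        return {}  # prompt never seen: nothing to predict from
    return {nxt: n / total for nxt, n in followers.items()}

def most_likely_continuation(pairs, prompt):
    """Pick the continuation with the highest estimated probability."""
    probs = continuation_probabilities(pairs, prompt)
    return max(probs, key=probs.get) if probs else None

print(continuation_probabilities(training_pairs, "What is 2+2?"))
print(most_likely_continuation(training_pairs, "What is 2+2?"))  # 4
```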

Foundation models are able to handle math as language because they are trained on a massive amount of language data, that is, text in the form of articles, books, webpages, posts, and responses. From these data, foundation models create relationships amongst parts of language.

For example:

- “morning”, “night”, and “luck” are related to “good”

- “good” and “bad” are related to “luck”, “time”, and “habit”

- “good” is related to “better” and “best”; “bad” is related to “worse” and “worst”
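Relationships like the ones listed above can be approximated, very crudely, by counting which words appear together. This Python sketch assumes a tiny invented corpus and treats two words as related if they share a sentence; real foundation models learn far richer relationships, represented as numeric vectors, from enormous datasets.

```python
from collections import Counter
from itertools import combinations

# Hypothetical toy corpus echoing the examples above.
corpus = [
    "good morning", "good night", "good luck", "bad luck",
    "good habit", "bad habit", "good time", "bad time",
    "good better best", "bad worse worst",
]

def cooccurrence_counts(sentences):
    """Count how often each pair of distinct words appears in the same sentence."""
    pairs = Counter()
    for sentence in sentences:
        words = sorted(set(sentence.split()))
        for a, b in combinations(words, 2):
            pairs[(a, b)] += 1
    return pairs

def related_words(pairs, word):
    """Return words that co-occur with `word`, strongest relationship first."""
    scores = Counter()
    for (a, b), n in pairs.items():
        if a == word:
            scores[b] += n
        elif b == word:
            scores[a] += n
    return [w for w, _ in scores.most_common()]

pairs = cooccurrence_counts(corpus)
print(related_words(pairs, "luck"))
print(set(related_words(pairs, "good")) & set(related_words(pairs, "bad")))
```

Here “good” and “bad” end up sharing neighbors (“luck”, “habit”, “time”), a toy version of how overlapping contexts tie related words together.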

Foundation models process inputs and produce outputs through these relationships, treating language as an array of complexly related symbols. Unlike comparatively simplistic predictive keyboards, foundation models can take in much more context (e.g. surrounding words, sentences, and chat history) when processing inputs. As such, foundation models are adept at identifying what someone is actually asking, that is, the user’s intent.
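The difference context makes can be sketched by letting a predictor look back more than one word. This hypothetical predictor records up to three preceding words and, at prediction time, uses the longest context it has seen before, falling back to shorter ones. That is a classic n-gram “backoff” idea, far simpler than how foundation models actually weigh context; the corpus is invented.

```python
from collections import Counter, defaultdict

def build_context_counts(corpus, max_context=3):
    """Map each preceding context (up to max_context words) to counts of the next word."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for i in range(1, len(words)):
            for n in range(1, max_context + 1):
                if i - n < 0:
                    break
                context = tuple(words[i - n:i])
                counts[context][words[i]] += 1
    return counts

def predict(counts, text, max_context=3):
    """Use the longest context seen in training; back off to shorter ones."""
    words = text.lower().split()
    for n in range(min(max_context, len(words)), 0, -1):
        context = tuple(words[-n:])
        if context in counts:
            return counts[context].most_common(1)[0][0]
    return None  # no context at all was seen in training

# Hypothetical toy corpus.
corpus = [
    "good morning everyone",
    "good morning to you",
    "good morning all",
    "wish you good luck",
    "wish me good luck",
    "sleep tight and good night",
]

counts = build_context_counts(corpus)
for text in ["good", "wish you good", "sleep tight and good"]:
    print(f"{text!r} -> {predict(counts, text)!r}")
```

With only “good” to go on, the predictor says “morning”; given “wish you good”, the longer context points to “luck” instead.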

For our prompt, a foundation model processes the math question as a sentence and homes in on the important parts of that sentence. Because models have been trained on language that includes math, the training dataset may include precisely our question, as well as questions of a similar or related type.

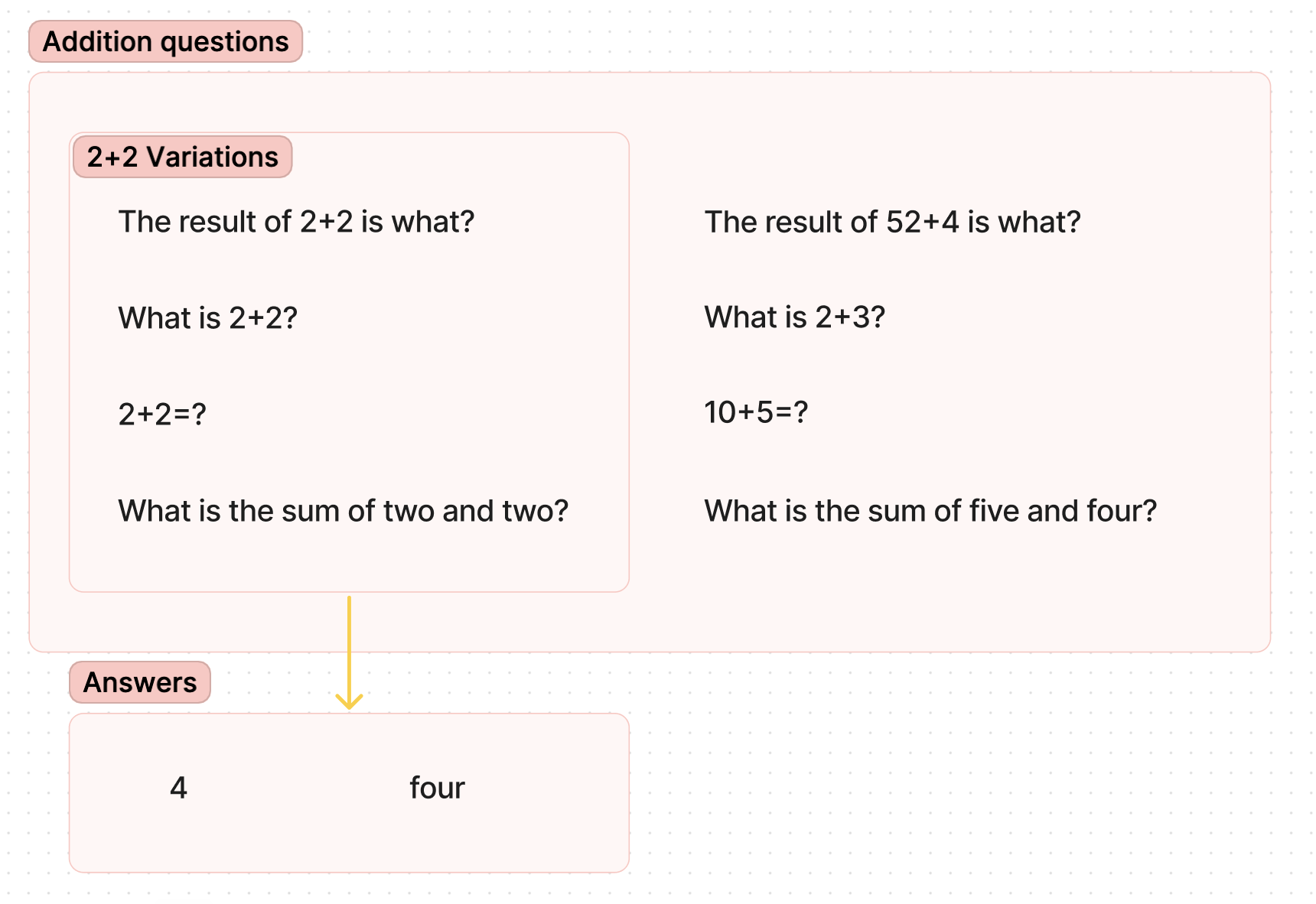

For example, “What is 2+2?” could be stated as “2+2=?” or “What is the sum of two and two?” or “The result of 2+2 is what?” All of these phrasings ask essentially the same addition question. The model constructs relationships amongst these variations in order to associate the core question with what is most likely to follow: “4” or “four”.

Additionally, “What is 2+2?” is similar in structure to other addition questions, such as “What is 2+3?” or “What is the sum of five and four?” These related phrasings are represented in the model as well, but they have a low likelihood of being associated with “4” or “four”.
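The same counting view shows why structurally similar questions don’t all lead to “4”. In this sketch the counts are invented, and the single stray “4” after “What is 2+3?” stands in for noise in real training data; the hypothetical probability_of helper reports what share of each prompt’s observed continuations are “4” or “four”.

```python
from collections import Counter

# Hypothetical counts of what followed each prompt in some training text.
observed = {
    "What is 2+2?": Counter({"4": 8, "four": 2}),
    "What is 2+3?": Counter({"5": 7, "five": 2, "4": 1}),
    "What is the sum of five and four?": Counter({"9": 6, "nine": 4}),
}

def probability_of(answers, prompt, data):
    """Share of observed continuations of `prompt` that are one of `answers`."""
    followers = data.get(prompt, Counter())
    total = sum(followers.values())
    if total == 0:
        return 0.0
    return sum(followers[a] for a in answers) / total

for prompt in observed:
    p = probability_of({"4", "four"}, prompt, observed)
    print(f"{prompt!r}: P('4' or 'four') = {p:.1f}")
```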

In short, the LLM doesn’t do math. Instead, it predicts what should come next after the sequence “What is 2+2?”, much as a predictive keyboard predicts what should come after the word “good” while you text a friend.

Approach 2: Use a tool

In other instances, AI systems use tools, such as calculators, to handle math questions. Through what is called orchestration, AI systems combine foundation models with other software that structures how the models process inputs, what outputs are expected, and what is done next. Conceptually, this type of system answers math questions by figuratively reaching for a calculator.

When you pose a question to a system that uses orchestration, the system adds language of its own, called a system prompt. System prompts instruct the foundation model to respond in particular ways. One common system prompt instructs the foundation model not to answer the question directly, but to determine the steps needed to produce a response.
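Here is a minimal sketch of that step, assuming a message format of role-tagged dictionaries similar to what common chat APIs accept. The system prompt wording and the build_messages helper are hypothetical, and no model is actually called; the point is only that the user’s question reaches the model wrapped in instructions the user never typed.

```python
# Hypothetical system prompt; real products use their own (usually unpublished) wording.
SYSTEM_PROMPT = (
    "Do not answer the user's question directly. "
    "First list the steps needed to answer it, and name any tool each step needs."
)

def build_messages(user_question, system_prompt=SYSTEM_PROMPT):
    """Prepend the system prompt to what the user typed, as role-tagged messages."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_question},
    ]

messages = build_messages("What is 2+2?")
for m in messages:
    print(f"{m['role']}: {m['content']}")
```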

In addition, the orchestration layer often provides the foundation model with a set of tools it can call. These tools may include the ability to search the web, consult Wikipedia, or use a calculator. If the foundation model determines that a step requires a web search, the AI system can run the search and bring the result back as input for the next step.
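A tool layer can be as simple as a table mapping tool names to functions. In this hypothetical sketch, the calculator does real arithmetic by walking the syntax tree produced by Python’s ast module (avoiding eval() on untrusted text), while web_search is only a placeholder. The names calculator, web_search, TOOLS, and run_tool are illustrative, not any product’s actual API.

```python
import ast
import operator

# Map supported AST operator node types to arithmetic functions.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.USub: operator.neg,
}

def calculator(expression):
    """Evaluate basic arithmetic without eval(), by walking the parsed syntax tree."""
    def _eval(node):
        if isinstance(node, ast.Expression):
            return _eval(node.body)
        if (isinstance(node, ast.Constant) and isinstance(node.value, (int, float))
                and not isinstance(node.value, bool)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.operand))
        raise ValueError(f"unsupported expression: {expression!r}")
    return _eval(ast.parse(expression, mode="eval"))

def web_search(query):
    """Hypothetical placeholder; a real system would call a search service here."""
    return f"[search results for {query!r}]"

TOOLS = {"calculator": calculator, "web_search": web_search}

def run_tool(name, argument):
    """Look up a tool by name and run it, as an orchestration layer might."""
    if name not in TOOLS:
        raise KeyError(f"no tool named {name!r}")
    return TOOLS[name](argument)

print(run_tool("calculator", "2+2"))  # prints 4
```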

Taken together, the AI system produces an answer by combining the language and logic processing of the foundation model with the system prompt and tooling of the orchestration layer.

For our simple math question, a lot is going on behind the scenes. First, the question is processed by the foundation model along with the instructions to determine what steps are needed. At this stage, the foundation model determines that the input is, in fact, a math question and that the calculator is the appropriate tool for the job. The AI system then uses the calculator tool, and the foundation model decides that the result sufficiently answers the initial question. The final thing to do is respond to the user: “The sum of 2 and 2 is 4.”
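The whole sequence can be sketched as a tiny orchestration loop in Python. The two model_* functions are hypothetical stand-ins: a real system would send the system prompt and question to a foundation model and parse its reply, whereas these stubs just pattern-match the text. The loop is also simplified to a single plan, tool call, and response, with no check for whether more steps are needed. Only the calculator function does any arithmetic.

```python
import operator
import re

# Matches a single "a <op> b" integer expression, e.g. "2+2" or "10 - 4".
ARITHMETIC = re.compile(r"(-?\d+)\s*([-+*/])\s*(-?\d+)")
OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}
NAMES = {"+": "sum", "-": "difference", "*": "product", "/": "quotient"}

def calculator(expression):
    """The tool: does real arithmetic on a single a <op> b expression."""
    a, op, b = ARITHMETIC.fullmatch(expression.strip()).groups()
    return OPS[op](int(a), int(b))

def model_plan(question):
    """Hypothetical stand-in for the model's planning step (classify + pick a tool)."""
    match = ARITHMETIC.search(question)
    if match:
        return {"tool": "calculator", "input": match.group(0)}
    return {"tool": None, "input": None}

def model_respond(question, plan, tool_result):
    """Hypothetical stand-in for the model phrasing the final answer in language."""
    if plan["tool"] == "calculator":
        a, op, b = ARITHMETIC.fullmatch(plan["input"].strip()).groups()
        return f"The {NAMES[op]} of {a} and {b} is {tool_result}."
    return "I could not find a tool for that question."

def orchestrate(question):
    plan = model_plan(question)  # step 1: decide what to do
    result = calculator(plan["input"]) if plan["tool"] == "calculator" else None  # step 2: use the tool
    return model_respond(question, plan, result)  # step 3: answer the user

print(orchestrate("What is 2+2?"))  # The sum of 2 and 2 is 4.
```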

So what?

In both approaches, there is one takeaway: foundation models are not doing math.

These approaches are nothing to dismiss, even if they aren’t doing exactly what we think they are doing. The fact that a foundation model can answer math questions without knowing math is very impressive. It can also lead us to presume that foundation models are more capable, or capable in different ways, than they truly are. When we already know the answer to a question, we can evaluate the output against our own knowledge, and a correct answer can convince us that a foundation model or AI system is capable in ways it isn’t.

But what happens when you pose a question you don’t know the answer to yourself? Therein lies a major problem. AI systems that rely on foundation models are notoriously good at producing responses that sound right. If prompters don’t know what the answer should be or how to determine it themselves, AI systems can lead them astray unless they are careful.

Plenty of work is going into making both AI systems that actually do math and systems that align with our expectations and principles. Even with all of this work, we should approach AI systems with appropriate expectations.

One expectation I think is important is that these systems are “dependably unexpected”. Even though we can ask them questions and converse with them in natural language, foundation models and AI systems writ large do not necessarily operate as we might expect. As such, these systems can (and often do) produce unexpected outcomes or produce outcomes in unexpected ways.

Asking “What is 2+2?” demonstrates this quality of being dependably unexpected. As the two approaches above show, foundation models can produce an expected answer through means other than math. But they can also produce unexpected outcomes. The question “What is 2+2?” could result in the answer “5” or “A Bob Seger song.” The answer “5” might be the result of a foundation model being trained on a corpus of wrong answers. Or “5” might be the result of a system set to select answers that have a low likelihood. “A Bob Seger song” might result from the system assuming the wrong context (music rather than math) or using the wrong tool (a web search rather than a calculator). Simply put, when we assume that an AI system arrives at any answer, right or wrong, through the process we expect, we are wrong. The beauty and risk of AI is that it doesn’t work as we expect.
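The “low likelihood” route to “5” can come from a sampling setting rather than bad data. Many systems draw the next token at random from the model’s probability distribution, and a temperature parameter (a widely used setting, though implementations differ) controls how flat that distribution is. This Python sketch uses made-up probabilities for what follows “What is 2+2?”; the helper names are hypothetical. Raising the temperature makes the unlikely “5” come up far more often, with no change to the underlying probabilities.

```python
import math
import random

def apply_temperature(probs, temperature):
    """Rescale a distribution: temperature < 1 sharpens it, > 1 flattens it."""
    logits = {tok: math.log(p) / temperature for tok, p in probs.items()}
    top = max(logits.values())  # subtract the max for numerical stability
    weights = {tok: math.exp(l - top) for tok, l in logits.items()}
    total = sum(weights.values())
    return {tok: w / total for tok, w in weights.items()}

def sample(probs, rng):
    """Draw one token at random, weighted by its probability."""
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]

# Hypothetical next-token probabilities after "What is 2+2?"
next_token = {"4": 0.90, "four": 0.07, "5": 0.03}

rng = random.Random(0)
for t in (0.5, 1.0, 3.0):
    scaled = apply_temperature(next_token, t)
    draws = [sample(scaled, rng) for _ in range(1000)]
    print(f"temperature {t}: P('5') = {scaled['5']:.3f}, '5' drawn {draws.count('5')} times")
```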

Thinking of AI systems and foundation models as dependably unexpected is useful beyond how we use these systems ourselves: this thinking can help frame questions about how, when, and why we build AI systems or use foundation models. Rather than assuming these technologies are good for everything, accepting AI systems as dependably unexpected means asking where unexpectedness is tolerable and even welcome, and where it is not acceptable. In cases where unexpectedness in process or outcome is not tolerable, we need to ask under what conditions, if any, AI systems might be used. In other cases, unexpectedness might be wonderful, say, when suggesting ways to approach a problem or creating images for creative consideration. In both cases, the onus is on those building (with) these technologies to articulate the value of unexpectedness and to communicate what others might expect from such systems.

For a deeper dive into how foundation models work, check out “Show your work: what to expect from how LLMs ‘do’ math” by Thomas Lodato.