You can use the Vertex AI SDK for Python to view data from Vertex AI Experiments runs and to compare runs.

The Google Cloud console provides a visualization of the data associated with these runs.

Get experiment runs data

These samples show how to get the summary metrics, parameters, time series metrics, artifacts, and classification metrics for a particular experiment run.

Summary metrics

Python

- run_name: The name of the run to get data from.
- experiment: The name or instance of the experiment that contains the run. To find your list of experiments in the Google Cloud console, select Experiments in the section navigation.
- project: Your project ID. You can find it on the Google Cloud console welcome page.
- location: See List of available locations.
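A minimal sketch of reading a run's summary metrics, assuming the `google-cloud-aiplatform` package is installed and your environment is authenticated to Google Cloud. The wrapper name `get_experiment_run_metrics` is hypothetical; the SDK calls it relies on are `aiplatform.ExperimentRun(...)`, which looks up an existing run, and `ExperimentRun.get_metrics()`, which returns the logged summary metrics as a dictionary.

```python
from typing import Dict, Union


def get_experiment_run_metrics(
    run_name: str,
    experiment: Union[str, "aiplatform.Experiment"],
    project: str,
    location: str,
) -> Dict[str, Union[float, int]]:
    """Return the summary metrics logged for a single experiment run."""
    # Imported inside the function so this file can be loaded even where
    # google-cloud-aiplatform is not installed.
    from google.cloud import aiplatform

    # Look up an existing run by name within the given experiment.
    experiment_run = aiplatform.ExperimentRun(
        run_name=run_name,
        experiment=experiment,
        project=project,
        location=location,
    )
    # Dictionary that maps each metric name to its logged value.
    return experiment_run.get_metrics()
```

For example, `get_experiment_run_metrics("my-run", "my-experiment", "my-project", "us-central1")` returns a dictionary keyed by metric name; all four argument values are placeholders.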

Parameters

Python

- run_name: The name of the run to get data from.
- experiment: The name or instance of the experiment that contains the run. To find your list of experiments in the Google Cloud console, select Experiments in the section navigation.
- project: Your project ID. You can find it on the Google Cloud console welcome page.
- location: See List of available locations.
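A minimal sketch of reading a run's parameters, assuming the `google-cloud-aiplatform` package is installed and your environment is authenticated to Google Cloud. The wrapper name `get_experiment_run_params` is hypothetical; it looks up the run with `aiplatform.ExperimentRun(...)` and returns the dictionary produced by `ExperimentRun.get_params()`.

```python
from typing import Dict, Union


def get_experiment_run_params(
    run_name: str,
    experiment: Union[str, "aiplatform.Experiment"],
    project: str,
    location: str,
) -> Dict[str, Union[float, int, str]]:
    """Return the parameters logged for a single experiment run."""
    # Imported inside the function so this file can be loaded even where
    # google-cloud-aiplatform is not installed.
    from google.cloud import aiplatform

    # Look up an existing run by name within the given experiment.
    experiment_run = aiplatform.ExperimentRun(
        run_name=run_name,
        experiment=experiment,
        project=project,
        location=location,
    )
    # Dictionary that maps each parameter name to its logged value.
    return experiment_run.get_params()
```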

Time series metrics

Python

- run_name: The name of the run to get data from.
- experiment: The name or instance of the experiment that contains the run. To find your list of experiments in the Google Cloud console, select Experiments in the section navigation.
- project: Your project ID. You can find it on the Google Cloud console welcome page.
- location: See List of available locations.
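A minimal sketch of reading a run's time series metrics, assuming the `google-cloud-aiplatform` package (and pandas, which this call returns data in) is installed and your environment is authenticated to Google Cloud. The wrapper name `get_experiment_run_time_series` is hypothetical; it calls `ExperimentRun.get_time_series_data_frame()` on the looked-up run.

```python
from typing import Union


def get_experiment_run_time_series(
    run_name: str,
    experiment: Union[str, "aiplatform.Experiment"],
    project: str,
    location: str,
) -> "pandas.DataFrame":
    """Return the time series metrics logged for a single experiment run."""
    # Imported inside the function so this file can be loaded even where
    # google-cloud-aiplatform is not installed.
    from google.cloud import aiplatform

    # Look up an existing run by name within the given experiment.
    experiment_run = aiplatform.ExperimentRun(
        run_name=run_name,
        experiment=experiment,
        project=project,
        location=location,
    )
    # The time series metrics come back as a pandas DataFrame.
    return experiment_run.get_time_series_data_frame()
```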

Artifacts

Python

- run_name: The name of the run to get data from.
- experiment: The name or instance of the experiment that contains the run. To find your list of experiments in the Google Cloud console, select Experiments in the section navigation.
- project: Your project ID. You can find it on the Google Cloud console welcome page.
- location: See List of available locations.
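A minimal sketch of listing a run's artifacts, assuming the `google-cloud-aiplatform` package is installed and your environment is authenticated to Google Cloud. The wrapper name `get_experiment_run_artifacts` is hypothetical; it calls `ExperimentRun.get_artifacts()`, which returns a list of artifact resources associated with the run.

```python
from typing import List, Union


def get_experiment_run_artifacts(
    run_name: str,
    experiment: Union[str, "aiplatform.Experiment"],
    project: str,
    location: str,
) -> List["aiplatform.Artifact"]:
    """Return the artifacts associated with a single experiment run."""
    # Imported inside the function so this file can be loaded even where
    # google-cloud-aiplatform is not installed.
    from google.cloud import aiplatform

    # Look up an existing run by name within the given experiment.
    experiment_run = aiplatform.ExperimentRun(
        run_name=run_name,
        experiment=experiment,
        project=project,
        location=location,
    )
    # List of artifact resources linked to this run.
    return experiment_run.get_artifacts()
```

Each returned artifact is a Vertex ML Metadata resource, so its `resource_name` identifies it in the Metadata Store.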

Classification metrics

Python

- run_name: The name of the run to get data from.
- experiment: The name or instance of the experiment that contains the run. To find your list of experiments in the Google Cloud console, select Experiments in the section navigation.
- project: Your project ID. You can find it on the Google Cloud console welcome page.
- location: See List of available locations.
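A minimal sketch of reading a run's classification metrics, assuming the `google-cloud-aiplatform` package is installed and your environment is authenticated to Google Cloud. The wrapper name `get_experiment_run_classification_metrics` is hypothetical; it calls `ExperimentRun.get_classification_metrics()`, which returns a list of dictionaries, one for each set of classification metrics logged to the run.

```python
from typing import Any, Dict, List, Union


def get_experiment_run_classification_metrics(
    run_name: str,
    experiment: Union[str, "aiplatform.Experiment"],
    project: str,
    location: str,
) -> List[Dict[str, Any]]:
    """Return the classification metrics logged for a single experiment run."""
    # Imported inside the function so this file can be loaded even where
    # google-cloud-aiplatform is not installed.
    from google.cloud import aiplatform

    # Look up an existing run by name within the given experiment.
    experiment_run = aiplatform.ExperimentRun(
        run_name=run_name,
        experiment=experiment,
        project=project,
        location=location,
    )
    # One dictionary per set of logged classification metrics.
    return experiment_run.get_classification_metrics()
```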

Compare runs

Using the Vertex AI SDK for Python, you can retrieve the data for all runs in an experiment. The data for the experiment runs is returned as a pandas DataFrame.

Python

- experiment_name: The name of the experiment whose runs you want to compare. To find your list of experiments in the Google Cloud console, select Experiments in the section navigation.
- project: Your project ID. You can find it on the Google Cloud console welcome page.
- location: See List of available locations.
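A minimal sketch of loading every run in an experiment into one DataFrame for comparison, assuming the `google-cloud-aiplatform` package is installed and your environment is authenticated to Google Cloud. The wrapper name `get_experiment_runs_df` is hypothetical; it sets defaults with `aiplatform.init(...)` and then calls `aiplatform.get_experiment_df()`, which, when no experiment is passed, reads the experiment set in `init()`.

```python
def get_experiment_runs_df(
    experiment_name: str,
    project: str,
    location: str,
) -> "pandas.DataFrame":
    """Return the data for all runs in an experiment as a DataFrame."""
    # Imported inside the function so this file can be loaded even where
    # google-cloud-aiplatform is not installed.
    from google.cloud import aiplatform

    # Set the default experiment, project, and location for later SDK calls.
    aiplatform.init(experiment=experiment_name, project=project, location=location)
    # With no argument, get_experiment_df() uses the experiment set in init().
    return aiplatform.get_experiment_df()
```

Setting the experiment once with `init()` keeps later SDK calls in the same session pointed at the same experiment; you can also pass the experiment name to `get_experiment_df()` directly.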

Google Cloud console

Use the Google Cloud console to view details of your experiment runs and compare the experiment runs to each other.

View experiment run data

- In the Google Cloud console, go to the Experiments page.

A list of experiments associated with a project appears.

- Select the experiment containing the run that you want to check.

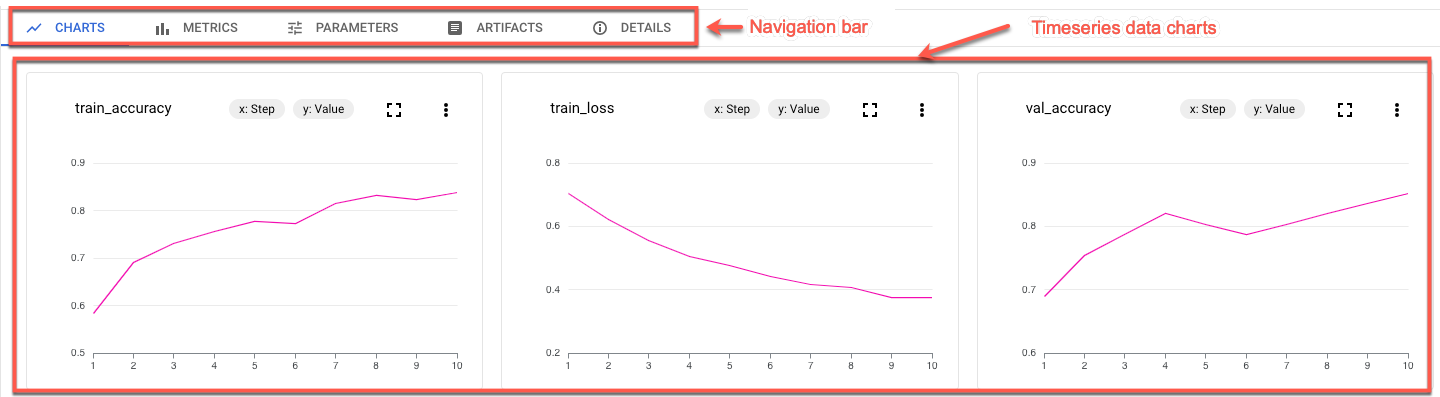

A list of runs, time series charts, and a table of metrics and parameters appear. In this example, three runs are selected, but only two lines appear in the time series charts because the third run has no time series data to display.

- Click the name of the run to navigate to its details page.

The navigation bar and time series charts appear.

- To view metrics, parameters, artifacts, and details for your selected run, click the respective buttons in the navigation bar.

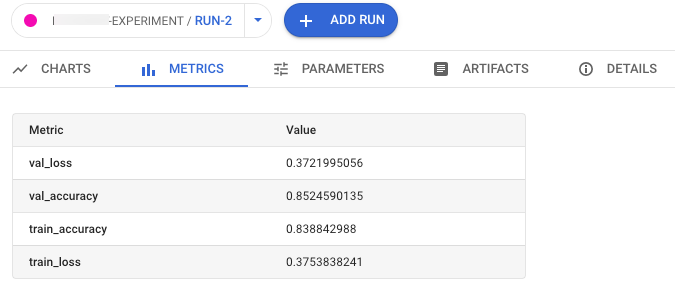

- Metrics

- Parameters

- Artifacts

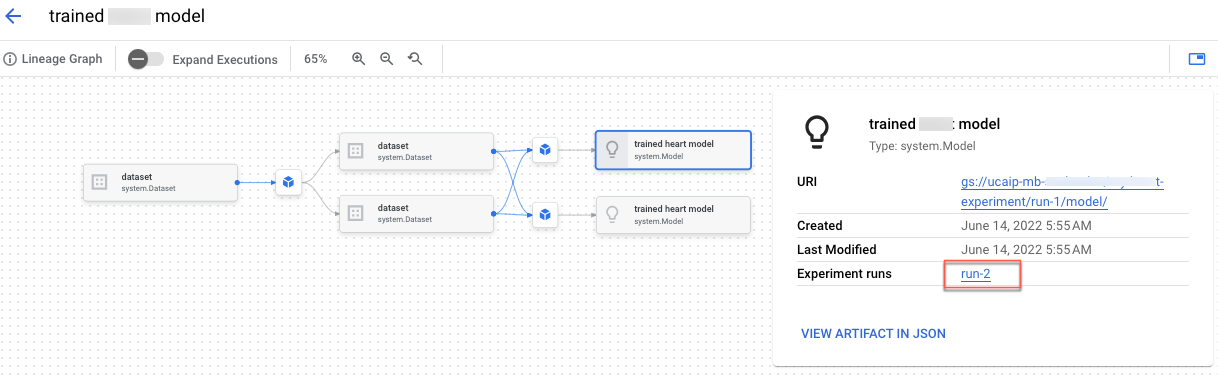

To view artifact lineage, click the Open artifact in Metadata Store link. The lineage graph associated with the run appears.

- Details


To share the data with others, use the URLs associated with the views. For example, share the list of experiment runs associated with an experiment:

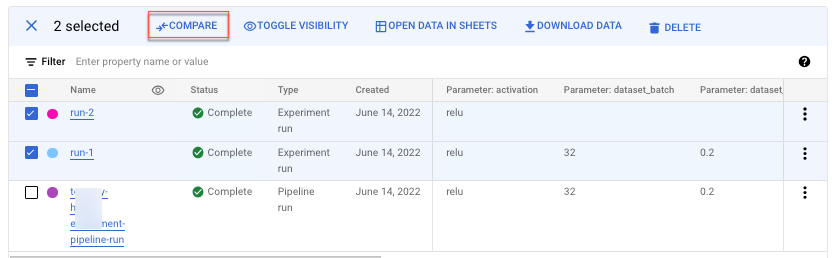

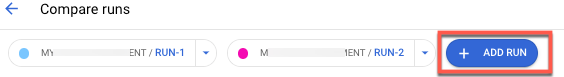

Compare experiment runs

You can select runs to compare both within an experiment and across experiments.

- In the Google Cloud console, go to the Experiments page.

A list of experiments appears.

- Select the experiment containing the runs that you want to compare. A list of runs appears.

- Select the experiment runs that you want to compare. Click Compare.

By default, charts appear that compare the time series metrics of the selected experiment runs.

- To add more runs from any experiment in your project, click Add run.

To share the data with others, use the URLs associated with the views. For example, share the comparison view of timeseries metrics data:

See Create and manage experiment runs for how to update the status of a run.