Running machine learning in the cloud for live service games

Patrick Smith

Head of Specialist Customer Engineers, Google Cloud for Games

Dan Zaratsian

Tech Lead, AI/ML Solutions Architect, Google Cloud for Games

Generative AI has become the number one technology of interest across many industries over the past year. Here at Google Cloud for Games, we think that online game use cases have some of the highest potential for generative AI, giving creators the power to build more dynamic games, monetize their games better, and get to market faster. As part of this, we’ve explored ways that games companies can train, deploy, and maintain GenAI utilizing Google Cloud. We'd like to walk you through what we’ve been working on, and how you can start using it in your game today. While we’ll focus on gen AI applications, the framework we’ll be discussing has been developed with all machine learning in mind, not just the generative varieties.

Long term, the possibilities of gen AI in games are endless, but we believe the following use cases are the most realistic and valuable to the industry over the next 1-2 years.

- Game production

- Adaptive gameplay

- In-game advertising

Each of these helps with a core part of the game development and publishing process. Generative AI in game production, mainly in the development of 2D textures, 3D assets, and code, can help decrease the effort to create a new game, decrease the time to market, and help make game developers more effective overall. Thinking towards sustaining player engagement and monetizing existing titles, ideas like adaptive dialogue and gameplay can keep players engaged, and custom in-game objects can keep them enticed. In-game advertising opens a new realm of monetization, and lets us not only hyper-personalize ads to viewers, but also personalize their placement and integration into the game, creating seamless ad experiences that optimize views and engagement. If you think about the time to produce a small game, never mind a AAA blockbuster, development of individual game assets consumes an immense amount of time. If generative models can help reduce developer toil and increase the productivity of studio development teams by even a fraction, it could represent a faster time to market and better games for us all.

As part of this post, we introduce our Generative AI Framework for Games, which provides templates for running gen AI for games on Google Cloud, as well as a framework for data ingest and storage to support these live models. We walk you through a demo of this framework below, where we specifically show two cases around image generation and code generation in a sample game environment.

But before we jump into what we’re doing here at Google Cloud, let’s first tackle a common misconception about machine learning in games.

Cloud-based ML plus live services games are a go

It’s a common refrain that running machine learning in the cloud for live game services is either cost prohibitive or prohibitive in terms of the induced latency that the end user experiences. Live games have always run on a client-server paradigm, and it’s often preferable that compute-intensive processes that don’t need to be authoritative run on the client. While this is a great deployment pattern for some models and processes, it’s not the only one. Cloud-based gen AI, or really any form of AI/ML, is not only possible, but can result in significantly decreased toil for developers, and reduced maintenance costs for publishers, all while supporting the latencies needed for today’s live games. It’s also safer — cloud-based AI safeguards your models from attacks, manipulation, and fraud.

Depending on your studio’s setup, Google Cloud can support complete in-cloud or hybrid deployments of generative models for adaptive game worlds. Generally, we recommend two approaches depending on your technology stack and needs:

- If starting from scratch, we recommend utilizing Vertex AI’s Private Endpoints for low latency serving, which can work whether you are looking for a low ops solution, or are running a service that does not interact with a live game environment.

- If running game servers on Google Cloud, especially if they are on Google Kubernetes Engine (GKE), and are looking to utilize that environment for ultra-low latency serving, we recommend deploying your models on GKE alongside your game server.

Let’s start with Vertex AI. Vertex AI supports both public and private endpoints, although for games, we generally recommend utilizing Private Endpoints to achieve the appropriate latencies. Vertex AI models utilize what we call an adaptor layer, which has two advantages: you don’t need to call the entire model when making a prediction, and any fine tuning conducted by you, the developer, is contained in your tenant. Compared to running a model yourself, whether in the cloud or on prem, this negates the need to handle enormous base models and the relevant serving and storage infrastructure to support them. As mentioned, we’ll show both of these in the demo below.

If you’re already running game servers on GKE, you can gain a lot of benefit from running both proprietary and open-source machine learning models on GKE, as well as from taking advantage of GKE’s native networking. With GKE Autopilot, our tests indicate that you can achieve prediction performance in the sub-millisecond range when deployed alongside your game servers. Over the public internet, we’ve achieved low millisecond latencies that are consistent with, if not better than, what we have seen in classic client-side deployments. If you’re afraid of the potential cost implications of running on GKE, think again: the vast majority of gaming customers see cost savings from deploying on GKE, alongside a roughly 30% increase in developer productivity. If you manage both your machine learning deployments and your game servers with GKE Autopilot, there’s also a significant reduction in operational burden. In our testing, we’ve found that whether you are deploying models on Vertex or GKE, the cost is roughly comparable.
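If you want to check latency figures like these against your own deployment, a small timing harness is enough to get started. The sketch below is illustrative only: `measure_latency_ms` and its `call` argument are hypothetical names, and `call` stands in for whatever client code invokes your model endpoint. It reports round-trip time from wherever the script runs, so the numbers include network hops as well as model time.

```python
import math
import statistics
import time
from typing import Callable


def measure_latency_ms(call: Callable[[], object], runs: int = 100) -> dict[str, float]:
    """Time repeated calls and summarize round-trip latency in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        call()  # assumption: one prediction request per invocation
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    # Nearest-rank percentile: smallest sample that covers 95% of the runs.
    p95_index = max(0, math.ceil(0.95 * len(samples)) - 1)
    return {
        "p50": statistics.median(samples),
        "p95": samples[p95_index],
        "max": samples[-1],
    }
```

Running the same harness from a pod next to the game server and from a client over the public internet gives a like-for-like view of the two paths discussed above.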

Unified data platforms enable real-time AI

AI/ML driven personalization thrives on large amounts of data regarding player preferences, gameplay, and the game’s world and lore. As part of our efforts in gen AI in games, we’ve developed a data pipeline and database template that utilizes the best of Google Cloud to ensure consistency and availability.

Live games require strong consistency, and models, whether generative or not, require the most up-to-date information about a player and their habits. Periodic retraining is necessary to keep models fresh and safe, and globally available databases like Spanner and BigQuery ensure that the data being fed into models, generative or otherwise, is kept fresh and secure. In many current games, users are fragmented by maps/realms, with hard lines between them, keeping experiences bounded by firm decisions and actions. As games move towards models where users inhabit singular realms, these games will require a single, globally available data store. In-game personalization also requires the live status of player activity. A strong data pipeline and data footprint is just as important for running machine learning models in a liveops environment as the models themselves. Considering the complexity of frequent model updates across a self-managed data center footprint, we maintain it’s a lighter lift to manage the training, deployment, and overall maintenance of models in the cloud.

By combining a real-time data pipeline with generative models, we can also inform model prompts about player preferences, or combine them with other models that track where, when, and why to personalize the game state. In terms of what is available today, this could be anything from serving pre-generated 3D meshes that are relevant to the user, to retexturing meshes with different colors, patterns, or lighting to match player preferences or mood, or even giving the player the ability to fully customize the game environment using natural language. All of this is in service of keeping our players happy and engaged with the game.
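To make the idea of informing model prompts with player preferences more concrete, here is a minimal sketch of folding player data into a text prompt. Everything in it is an assumption for illustration: the `PlayerProfile` fields, the `build_texture_prompt` helper, and the prompt wording are hypothetical and are not taken from the framework's code. In practice the profile would be populated from your data store.

```python
from dataclasses import dataclass, field


@dataclass
class PlayerProfile:
    """Hypothetical snapshot of what the data pipeline knows about a player."""
    player_id: str
    favorite_colors: list[str] = field(default_factory=list)
    recent_actions: list[str] = field(default_factory=list)
    mood: str = "neutral"


def build_texture_prompt(profile: PlayerProfile, asset_name: str) -> str:
    """Fold player preferences into a text prompt for a generative model."""
    colors = ", ".join(profile.favorite_colors) or "no stated preference"
    # Only the three most recent actions are included to keep the prompt short.
    actions = "; ".join(profile.recent_actions[-3:]) or "none recorded"
    return (
        f"Generate a texture for the in-game asset '{asset_name}'.\n"
        f"Preferred colors: {colors}.\n"
        f"Player mood: {profile.mood}.\n"
        f"Recent player actions: {actions}."
    )
```

Keeping prompt construction in one small function like this makes it easy to swap in fresher data as the pipeline delivers it, without touching the model-serving code.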

Demoing capabilities

Let’s jump into the framework. For the demo, we’ll be focusing on how Google Cloud’s data, AI, and compute technology can come together to provide real-time personalization of the game state.

The framework includes:

- Unity for the client and server

Open source:

- Terraform

- Agones

Google Cloud:

- GKE

- Vertex AI

- Pub/Sub

- Dataflow

- Spanner

- BigQuery

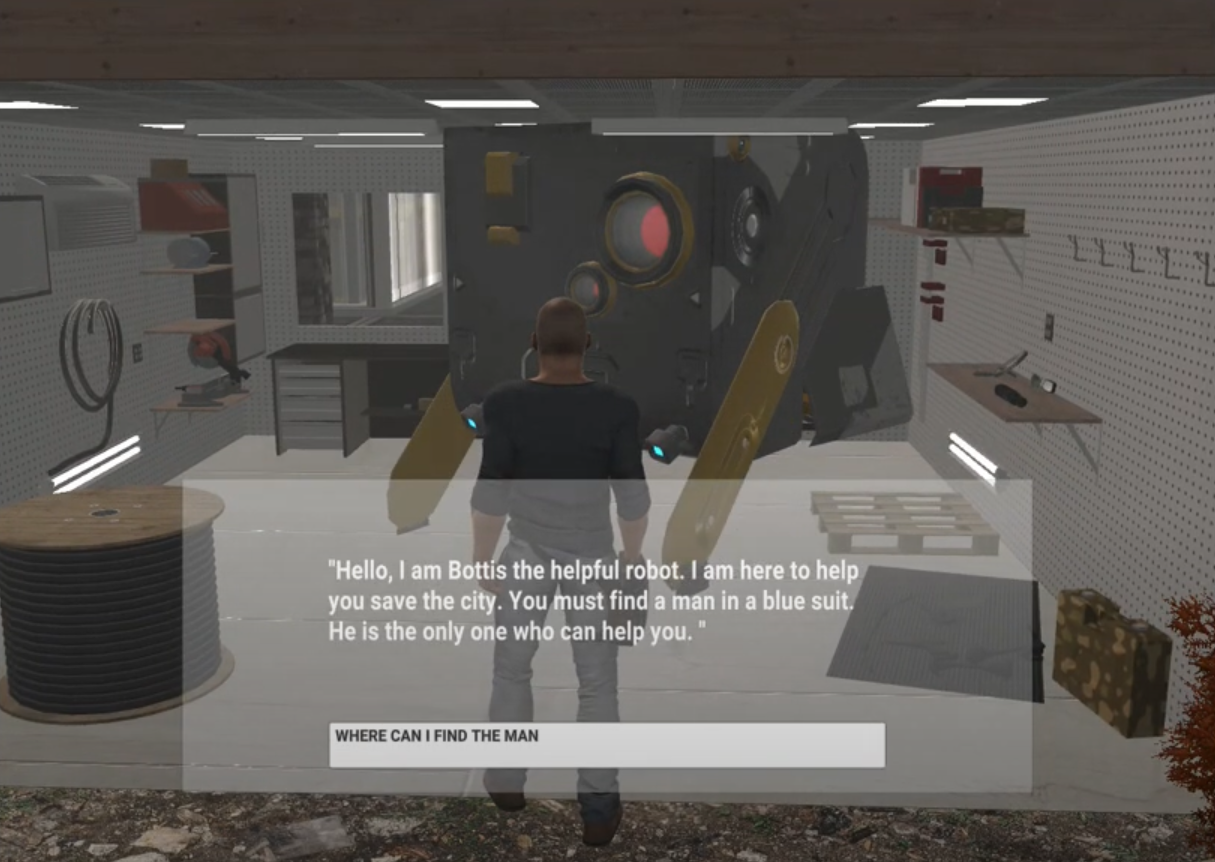

As part of this framework, we created an open-world demo game in Unity that uses assets from the Unity store. We designed this to be an open-world game, one where the player needs to interact with NPCs and is guided by dynamic billboards that assist the player in achieving the game objective. This game is running on GKE with Agones, and is designed to support multiple players. For simplicity, we focus on one player and their actions.

Now, back to the framework. Our back-end Spanner database contains information on the player and their past actions. We also have data on their purchasing habits across this make-believe game universe, with a connection to the Google Marketing Platform. This allows us in our demo game to start collecting universal player data across platforms. Spanner is our transactional database, and BigQuery is our analytical database, and data flows freely between them.

As part of this framework, we trained recommendation models in Vertex AI utilizing everything we know about the player, so that we can personalize in-game offers and advertising. For the sake of this demo, we’ll set those models aside for a moment and focus on the generative AI use cases behind our adaptive gameplay scenario: image generation, NPC chat, and code generation. The demo also shows both deployment patterns that we recommend for games: deploying on GKE alongside the game server, and utilizing Vertex AI. For image generation, we host an open-source Stable Diffusion model on GKE, and for code generation and NPC chat we’re using the gemini-pro model within Vertex AI. In cases where textures need to be modified or game objects are repositioned, we are using the Gemini LLM to generate code that can render, position, and configure prefabs within the game environment.

As the character walks through the game, we adaptively show images to suggest potential next moves and paths for the player. In practice, these could be game-themed images or even advertisements. In our case, we display images that suggest what the player should be looking for to progress game play.

In the example above, the player is shown a man surrounded by books, which provides a hint to the player that maybe they need to find a library as their next objective. That hint also aligns with the riddle that the NPC shared earlier in the game. If a player interacts with one of these billboards, which may mean moving closer to it or even viewing the billboard for a preset time, then the storyline of our game adapts to that context.
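The two interaction triggers described here, proximity and viewing time, can be sketched in a few lines. This is a language-neutral illustration in Python rather than the demo's Unity code, and the `BillboardTracker` class, its thresholds, and its 2D positions are all assumptions made for the example.

```python
import math
from dataclasses import dataclass


@dataclass
class BillboardTracker:
    """Tracks whether a player has interacted with one billboard."""
    position: tuple[float, float]
    near_radius: float = 3.0    # assumed distance threshold, in world units
    dwell_seconds: float = 2.0  # assumed viewing-time threshold
    _viewed_for: float = 0.0

    def update(self, player_pos: tuple[float, float], is_looking: bool, dt: float) -> bool:
        """Advance by dt seconds; return True when either trigger fires."""
        # Continuous viewing accumulates; looking away resets the timer.
        self._viewed_for = self._viewed_for + dt if is_looking else 0.0
        close_enough = math.dist(player_pos, self.position) <= self.near_radius
        return close_enough or self._viewed_for >= self.dwell_seconds
```

A game loop would call `update` once per tick for each visible billboard and, on the first `True`, hand the billboard's context to whatever drives the storyline.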

We can also load and configure prefabs on the fly with code generation. Below, you’ll see our environment as is, and we ask the NPC to change the bus color to yellow, which dynamically updates the bus color and texture.

Once we make the request, either by text or speech, Google Cloud GenAI models generate the exact code needed to update the prefab in the environment, and the change is then rendered live in the game.
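As a rough sketch of the request side of this flow, the snippet below wraps a player's request in a prompt, calls a model, and strips any Markdown code fence from the reply. It is not the demo's implementation: the prompt wording and both function names are hypothetical, and `generate` is an injected stand-in for the real model call (in the demo, that role is played by Gemini in Vertex AI).

```python
import re
from typing import Callable

# Matches an optional Markdown code fence wrapped around the model's reply.
_FENCE = re.compile(r"^`{3}[\w#+-]*\n(.*?)\n?`{3}\s*$", re.DOTALL)


def build_codegen_prompt(player_request: str, prefab_name: str) -> str:
    """Wrap the player's request in instructions for the code model."""
    return (
        "You write Unity C# snippets that modify an existing prefab.\n"
        f"Target prefab: {prefab_name}\n"
        f"Player request: {player_request}\n"
        "Reply with code only."
    )


def generate_prefab_code(
    player_request: str,
    prefab_name: str,
    generate: Callable[[str], str],
) -> str:
    """Call the injected model function and return the bare code."""
    reply = generate(build_codegen_prompt(player_request, prefab_name)).strip()
    match = _FENCE.match(reply)
    return match.group(1).strip() if match else reply
```

Passing the model call in as a function keeps the sketch testable with a fake model, and leaves room to add validation of the returned code before the game applies it.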

While this example shows how code generation can be used in-game, game developers can also use a similar process to place and configure game objects within their game environment to speed up game development.

If you would like to take the next step and check out the technology, then we encourage you to explore the GitHub link and resources below.

Additionally, we understand that not everyone will be interested in every facet of the framework. That's why we've made it flexible – whether you want to dive into the entire project or just work with specific parts of the code to understand how we implemented a certain feature, the choice is yours.

If you're looking to deepen your understanding of Google Cloud generative AI, check out this curated set of resources that can help:

Last but not least, if you’re interested in working with the project or would like to contribute to it, feel free to explore the code on GitHub, which focuses on the GenAI services used as part of this demo: