eBay accelerates its AI-driven recommendation engine with Vertex AI

Yuri M. Brovman

Senior Manager, Applied Research and Engineering, eBay

At any given time, eBay’s marketplace has more than 1.9 billion listings from millions of different sellers, ranging from the latest gadgets to the most obscure collectibles. Using machine learning (ML), including deep learning, along with AI-driven search capabilities and natural language processing (NLP), we have streamlined the user experience, making it easier to explore and find relevant products on our platform.

By implementing Google Cloud Vertex AI and Vector Search, we increased engineering velocity and built more advanced models that deliver better results to our customers. With these solutions in place, we can iterate four times faster, and we have put new features in motion that we otherwise could not have launched, reducing friction for both buyers and sellers on our platform.

Bridging the gap between on-premises and public cloud

As one of the largest global online marketplaces, eBay has spent more than 28 years analyzing, learning from, and evolving alongside the customer journey. In our mission to reinvent the future of e-commerce for enthusiasts, we’ve built a robust in-house infrastructure for our ML processes using state-of-the-art solutions, including an ML GPU cluster, Vector Similarity Engine, and many other innovations described in the eBay Tech Blog. We are the Ads Recommendations team, or “Recs” team for short, an innovative and mature recommender systems team (RecSys 2016 paper, RecSys 2021 paper, eBay Tech Blog 2022 post, eBay Tech Blog Jan 2023 post, eBay Tech Blog Oct 2023 post). To accelerate innovation and increase delivery velocity even further, we began to explore how to augment specific components in our ML workflow with additional capabilities from Google Cloud.

We turned to a third-party cloud provider to support our on-premises technologies, keeping three main criteria in mind: advanced AI capabilities, limitless scalability, and a mature, reliable infrastructure stack. We focused our efforts on Vertex AI, a platform that lets us train and deploy ML models, and on its Vector Search service, which lets us quickly deploy sets of embeddings derived from the text of item and user entities. Given the maturity of our Recs team’s ML infrastructure, we did not want or need to replace our whole ML flow all at once. Instead, we appreciated the flexibility of Vertex AI, which allowed us to move specific training and prediction components of our ML flow onto Google Cloud in a plug-and-play manner.

We explored Vector Search for serving deep-learning-based semantic embeddings of eBay listings. Vector Search can search across billions of items to find those that are semantically similar or related, helping our engineers iterate rapidly and giving customers quicker access to the products they’re seeking. With this tool, our use of embeddings is not limited to words or text: we can work with semantic embeddings for many kinds of data, including images, audio, video, and user preferences.
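The core operation behind this kind of semantic retrieval is nearest-neighbor search: you embed a query, then return the stored items whose embeddings are closest to it. At billion-item scale, Vector Search uses approximate nearest neighbor (ANN) indexes. The sketch below is only the exact, brute-force baseline that ANN approximates, using cosine similarity. It is not the Vertex AI API. The function names and the toy three-dimensional embeddings are hypothetical.

```python
import math


def normalize(vector):
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vector))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vector]


def top_k_similar(query, items, k):
    """Exact (brute-force) nearest-neighbor search by cosine similarity.

    items: dict mapping a hypothetical item id -> embedding (list of floats).
    Returns up to k item ids, most similar to `query` first.
    """
    q = normalize(query)
    scored = []
    for item_id, embedding in items.items():
        e = normalize(embedding)
        score = sum(a * b for a, b in zip(q, e))  # cosine similarity
        scored.append((score, item_id))
    # Sort by score only; ties keep insertion order (sort is stable).
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [item_id for _, item_id in scored[:k]]


# Toy embeddings (illustrative values, not real eBay data).
items = {
    "phone_case": [0.9, 0.1, 0.0],
    "phone_charger": [0.8, 0.3, 0.1],
    "vintage_stamp": [0.0, 0.2, 0.9],
}
print(top_k_similar([1.0, 0.2, 0.0], items, 2))  # ['phone_case', 'phone_charger']
```

Brute force scans every item for each query. That cost is why production systems trade a small amount of recall for the much lower latency of an ANN index.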

Our ML engineers develop many embedding models as they iterate on hypotheses for improved features. Any time the ML model changes, even for a small feature addition or a new version, the vector space of the embeddings changes, so a new vector search index must be built. The ability of Google Cloud Vector Search to onboard new embedding indexes quickly, in a self-service manner, is therefore essential for rapid ML model iteration. Rapid model iteration is ultimately what brings value to end users, in the form of an improved recommendations experience.
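Because embeddings from different model versions live in different vector spaces, a query embedded with one model version should only be matched against an index built with that same version. The sketch below shows that constraint as a small registry with one index per model version. `VersionedIndex`, `IndexRegistry`, and the version strings are hypothetical names for illustration. They are not part of Vector Search or of eBay's system.

```python
from dataclasses import dataclass, field


@dataclass
class VersionedIndex:
    """Hypothetical in-memory index tied to the embedding model that produced it."""

    model_version: str
    vectors: dict = field(default_factory=dict)

    def add(self, item_id, embedding, model_version):
        # Refuse vectors from a different model: they live in a different space.
        if model_version != self.model_version:
            raise ValueError(
                f"embedding from model {model_version!r} cannot be added to "
                f"index built for {self.model_version!r}"
            )
        self.vectors[item_id] = embedding


class IndexRegistry:
    """Hypothetical registry holding one index per embedding-model version."""

    def __init__(self):
        self._indexes = {}

    def build(self, model_version, embeddings):
        """Build a fresh index from {item_id: embedding} produced by one model."""
        index = VersionedIndex(model_version)
        for item_id, embedding in embeddings.items():
            index.add(item_id, embedding, model_version)
        self._indexes[model_version] = index
        return index

    def index_for(self, model_version):
        """Return the index matching the model that embedded the query."""
        try:
            return self._indexes[model_version]
        except KeyError:
            raise LookupError(
                f"no index built for model {model_version!r}; a new index is required"
            ) from None


registry = IndexRegistry()
registry.build("v1", {"item-1": [0.1, 0.2], "item-2": [0.3, 0.4]})
index = registry.index_for("v1")  # queries embedded with model v1 use this index
```

Every model change adds a `build` step here. That is why fast, self-service index onboarding sets the pace of model iteration.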

As an applications team, we do not own all levels of the production infrastructure stack, particularly the low-level network connectivity component. That’s where the Google network infrastructure team stepped in to collaborate with eBay’s network infrastructure team to build a hybrid interconnect link between eBay’s data center and Google Cloud. The Google Customer team was particularly helpful in enabling this component by connecting our Recs team to relevant stakeholders across Google and eBay, and even connected us to teams at eBay we had not previously worked with. Once the network connection was established, we began experimenting with different setups and APIs to create a framework that would meet our team’s and our customers’ needs.

Test, rinse, repeat

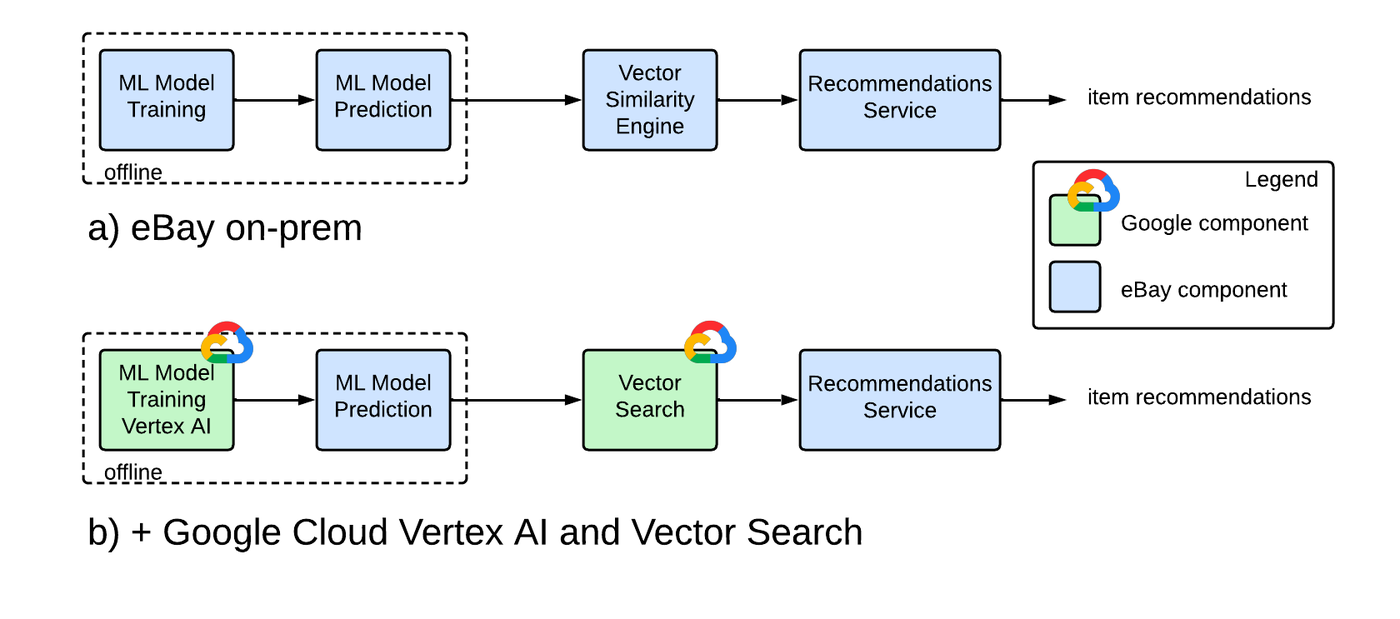

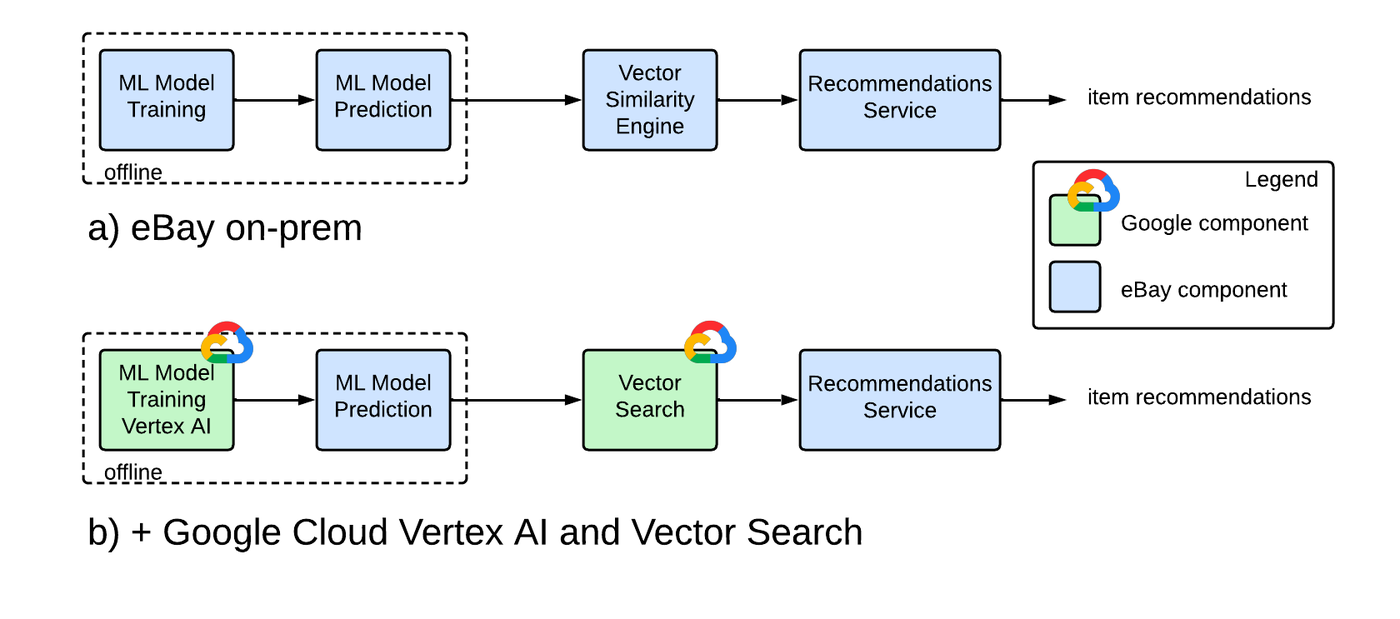

To create a more robust and intuitive recommendations engine, we began by building a custom model on Vertex AI and indexing our embeddings into Vector Search, iterating and testing to evaluate the system’s effectiveness (see Figure 1). With our hybrid interconnect link in place, we took the same index that we used with our in-house system, put it on our Google Cloud infrastructure, and ran an apples-to-apples comparison. Since the data was the same, we expected a neutral result, and that’s what our initial A/B test showed. While real-time performance was on par with what we had previously achieved, the onboarding process was much smoother and faster than our on-prem equivalent.

Figure 1: ML engineering architecture diagram for the Recs team at eBay: a) eBay on-prem-only components, and b) the same architecture with Google Cloud Vertex AI and Vector Search components added.

We used to spend many engineering cycles creating benchmarks for our in-house models, and onboarding a new index could take months. With Vector Search, we now complete this process in two weeks. After optimizing and tuning the model, we ran another A/B test with Vector Search that showed a statistically significant positive revenue uplift, demonstrating the long-term value of our Google Cloud implementation. We launched this feature, which showed a positive return on investment when ads revenue lift was weighed against cloud costs, to serve production traffic at several thousand transactions per second. Throughout this process, we have worked hand in hand with Google Cloud to create the best customer experience possible.
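This post doesn't say which statistical test produced the significant revenue uplift. As a hedged illustration only, here is one common way to check a difference in average revenue per user between control and treatment: a large-sample, two-sided z-test for a difference in means. It relies on the normal approximation, so it only suits large groups. The function name and the sample values are hypothetical.

```python
import math
from statistics import NormalDist, mean, variance


def two_sample_z_test(control, treatment):
    """Large-sample two-sided z-test for a difference in means.

    control, treatment: per-user metric values (e.g. revenue) for each A/B arm.
    Returns (uplift, p_value), where uplift = mean(treatment) - mean(control).
    """
    uplift = mean(treatment) - mean(control)
    # Standard error of the difference, using each arm's sample variance.
    se = math.sqrt(variance(control) / len(control) + variance(treatment) / len(treatment))
    if se == 0:
        raise ValueError("both arms have zero variance; the test is undefined")
    z = uplift / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided p-value
    return uplift, p_value


# Illustrative synthetic data: 1,000 users per arm.
control = [10.0, 12.0] * 500
treatment = [11.0, 13.0] * 500
uplift, p = two_sample_z_test(control, treatment)
print(uplift, p < 0.05)  # 1.0 True
```

A p-value below a chosen threshold (commonly 0.05) is what "statistically significant" usually means here. A neutral result, like the first apples-to-apples test, would show an uplift near zero with a large p-value.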

For example, eBay has latency SLA requirements for real-time applications that serve recommendations. The Google Cloud team listened to our requirements and helped us refine our Vector Search configuration to reach a real-time read latency of under 4 ms at the 95th percentile (p95), as measured server-side on the Google Cloud dashboard for Vector Search.
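A p95 target means that 95% of requests must complete within the threshold. The sketch below shows how such a percentile can be computed from raw latency samples with the nearest-rank method, and then checked against the 4 ms target. Nearest-rank is only one of several percentile definitions, and the Vector Search dashboard may compute its figure differently. The function names and the sample data are hypothetical.

```python
import math


def percentile_nearest_rank(samples_ms, pct):
    """Return the pct-th percentile of latency samples (nearest-rank method)."""
    if not samples_ms:
        raise ValueError("need at least one latency sample")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples_ms)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def meets_latency_slo(samples_ms, threshold_ms=4.0, pct=95):
    """True if the pct-th percentile latency is strictly below threshold_ms."""
    return percentile_nearest_rank(samples_ms, pct) < threshold_ms


# 100 illustrative requests: 95 fast ones and 5 slow outliers.
samples = [1.0] * 95 + [10.0] * 5
print(percentile_nearest_rank(samples, 95), meets_latency_slo(samples))  # 1.0 True
```

A tail target like p95 is stricter than an average. A handful of slow requests barely moves the mean, but once more than 5% of requests exceed 4 ms, the check fails.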

Creating next-generation shopping experiences with Google Cloud

eBay has been using tech-enabled recommendation generation systems to create better customer experiences for years, but “good enough” has never been part of our vocabulary. We’re always looking for innovative ways to use new technologies to improve our platform, and bolstering our in-house system with Google Cloud is helping us get there.

The advanced AI capabilities and excellent client support from Google Cloud have enabled our team to accelerate iterations of ML workstreams, ultimately delivering an enhanced shopping experience for eBay buyers. Our platform can now better analyze, anticipate, and deliver more relevant recommendations, simplifying the process of finding and purchasing items online.

As we look ahead and continue to explore more generative AI tools from Google Cloud, such as Gemini, we’re excited to see what the next iteration of eBay will deliver. With Google Cloud, we have created the right hybrid cloud environment with the AI resources needed to elevate the buyer’s journey beyond what was previously possible.